BigQuery is Google's cloud data warehouse that handles millions of rows without crashing, something Google Sheets can't do past 100,000 rows. The main barrier isn't technical complexity; it's the myth that you need SQL expertise. You don't. You need 6 pre-written queries, the ability to change a date, and 15 minutes for setup. This guide shows you how to get marketing data into BigQuery, analyze it with ready-to-use queries, and decide if it's worth upgrading from Sheets.

BigQuery vs Google Sheets: When Each Makes Sense

Why Marketers Actually Use BigQuery

You're running ads on Google, Meta, LinkedIn, maybe TikTok. Each platform dumps data in different formats. Facebook's CSV shows dates as 12/02/2025. Google Ads uses 2025-12-02. LinkedIn calls it "spend," Google calls it "cost," and your pivot table just broke because someone sorted column B without column A.

BigQuery fixes this by storing all your data in one place with consistent formatting. Query speed doesn't depend on your laptop. It runs on Google's servers. A query that would freeze Sheets for 45 seconds runs in 3 seconds in BigQuery.

The Value for Multi-Platform Marketers

When you're managing campaigns across multiple platforms, the manual work adds up fast. Weekly exports from each platform, formatting CSV files to match column names, fixing formulas when new campaigns launch, rebuilding broken pivot tables. This weekly ritual can easily consume several hours.

BigQuery eliminates this workflow entirely. Once your platforms are connected through a tool like Dataslayer, data flows automatically into standardized tables. Your weekly report becomes a saved query that runs in seconds instead of a manual reconstruction project. The time saved scales with the number of platforms you manage.

Setting Up Dataslayer to BigQuery (15 Minutes)

Before you can query data, you need to get it into BigQuery. Manual route: export CSVs weekly, format identically, upload manually. Realistic route: automate with Dataslayer.

Quick Setup Steps:

1. Create BigQuery Project

- Go to Google Cloud Console

- Click "New Project" → Name it

marketing_analytics - Copy your Project ID (looks like

marketing-analytics-123456)

2. Enable BigQuery API (Required)

- After creating the project, click "Enable" when prompted

- This must be done before creating datasets

3. Create Your Dataset

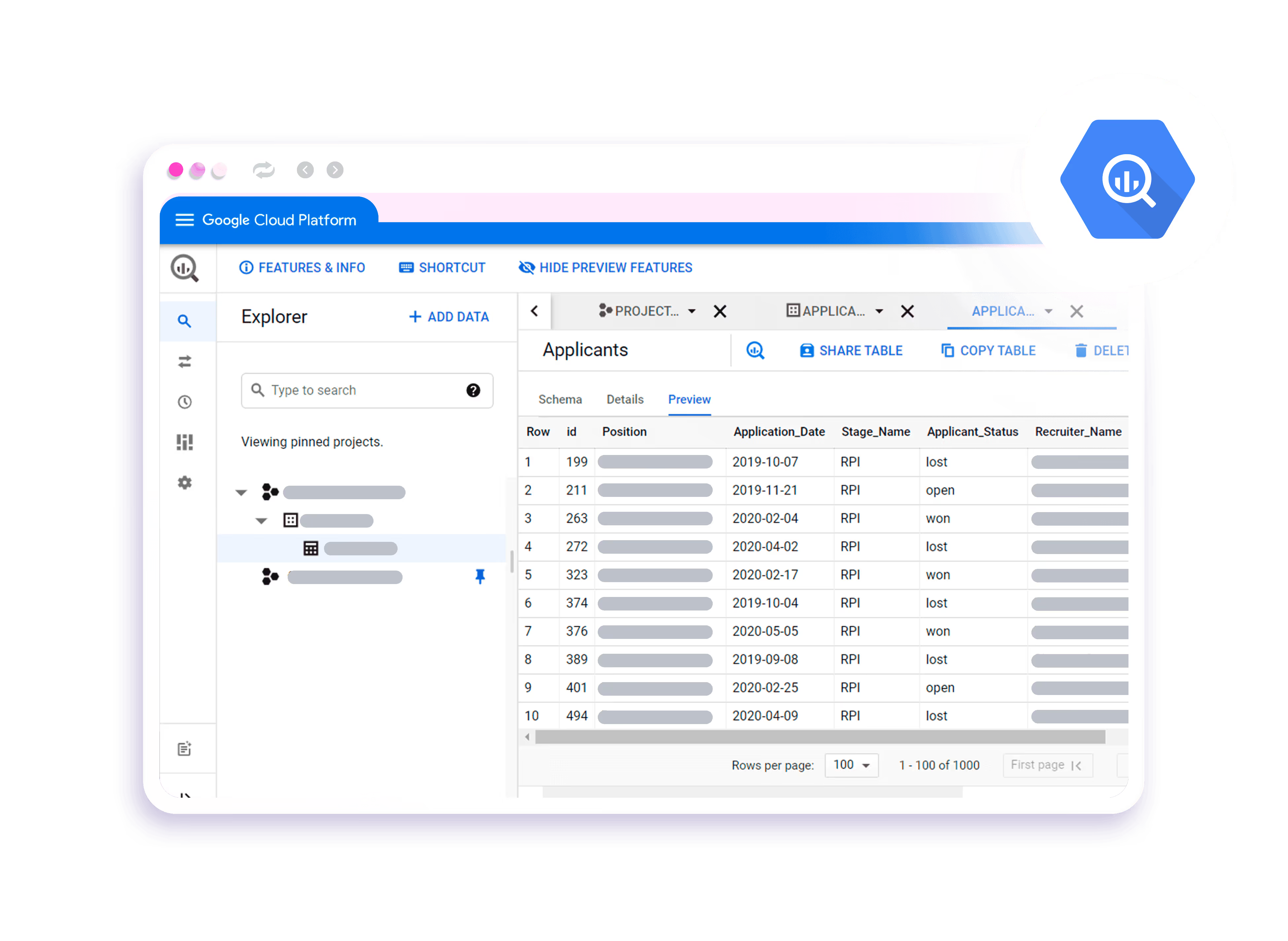

- In BigQuery Explorer → Right-click your project → "Create dataset"

- Dataset ID:

ad_data - Location: EU (Europe) or US (Americas)

- Table expiration: Leave OFF (or your data auto-deletes)

4. Connect Dataslayer

- Log into Dataslayer BigQuery

- Click "New Transfer"

5. Configure Your Data Source

- Select platform (example: Google Ads) → Authorize

- Choose: accounts, date range, metrics (Cost, Conversions, Clicks), dimensions (Date, Campaign, Device)

6. Set Destination

- Click "Transfer Data" button

- Fill in fields:

- Project: Select your project

- Dataset: Select

ad_data - Table name:

google_ads_performance(or your preference) - Write Mode: Append (critical. Keeps historical data)

7. Choose Frequency

- Schedule: Daily at 3 AM (recommended for fresh data + low cost)

- Manual: Refresh on-demand

- Manually Splitted: For large historical imports (2+ years)

8. Click "Confirm Changes"

Common Errors:

- "BigQuery API not enabled" → Go to APIs & Services → Enable BigQuery API

- "Permission denied" → Use same Google account that created the project

- "Invalid parameters combination" → Use Dataslayer's pre-built templates (some metrics/dimensions don't work together per platform APIs)

For detailed screenshots and troubleshooting, see Dataslayer's official BigQuery setup guide.

Pro tip: Start with your 2 biggest ad platforms. Learn the queries below with manageable data before connecting 6 more platforms.

6 Copy-Paste SQL Queries for Marketing Analytics

Open BigQuery → Click your project → "Compose new query." Paste these, replace YOUR_PROJECT_ID with yours, adjust dates.

Query 1: Total Spend by Channel (Last 30 Days)

SELECT

'Google Ads' AS channel,

SUM(cost) AS total_spend,

SUM(conversions) AS conversions

FROM `YOUR_PROJECT_ID.ad_data.google_ads_performance`

WHERE date >= DATE_SUB(CURRENT_DATE(), INTERVAL 30 DAY)

UNION ALL

SELECT

'Facebook Ads' AS channel,

SUM(spend) AS total_spend,

SUM(conversions) AS conversions

FROM `YOUR_PROJECT_ID.ad_data.facebook_ads_performance`

WHERE date >= DATE_SUB(CURRENT_DATE(), INTERVAL 30 DAY)

ORDER BY total_spend DESC;What it does: Combines multiple platforms into one table. Notice Facebook uses "spend" while Google uses "cost." BigQuery handles the inconsistency.

Query 2: Best Campaigns by ROAS

SELECT

campaign_name,

SUM(cost) AS spent,

SUM(conversion_value) AS revenue,

ROUND(SUM(conversion_value) / NULLIF(SUM(cost), 0), 2) AS roas

FROM `YOUR_PROJECT_ID.ad_data.google_ads_performance`

WHERE date >= '2025-01-01'

AND cost > 100

GROUP BY campaign_name

HAVING roas > 1.5

ORDER BY roas DESC

LIMIT 20;Translation: Show campaigns that spent over $100 and returned at least 1.5x, sorted best first. Change > 1.5 to your profitability threshold.

Query 3: Monthly Performance Trends

SELECT

FORMAT_DATE('%Y-%m', date) AS month,

SUM(clicks) AS clicks,

ROUND(SUM(clicks) / NULLIF(SUM(impressions), 0) * 100, 2) AS ctr,

SUM(cost) AS spend,

ROUND(SUM(cost) / NULLIF(SUM(clicks), 0), 2) AS cpc

FROM `YOUR_PROJECT_ID.ad_data.google_ads_performance`

WHERE date >= '2024-01-01'

GROUP BY month

ORDER BY month DESC;Use case: Spot seasonality. If CTR drops every December, your B2B audience might be mentally checked out for holidays.

Query 4: Customer Acquisition Cost by Source

SELECT

utm_source,

utm_medium,

SUM(cost) AS total_spend,

COUNT(DISTINCT user_id) AS new_customers,

ROUND(SUM(cost) / NULLIF(COUNT(DISTINCT user_id), 0), 2) AS cac

FROM `YOUR_PROJECT_ID.ad_data.conversion_data`

WHERE date >= DATE_SUB(CURRENT_DATE(), INTERVAL 90 DAY)

AND event_type = 'purchase'

GROUP BY utm_source, utm_medium

HAVING new_customers > 5

ORDER BY cac ASC;Why this matters: LinkedIn might cost $120/customer vs Facebook's $35/customer, but if LinkedIn customers have higher LTV, that $120 could be more profitable long-term.

Query 5: Geographic Performance

SELECT

country,

region,

SUM(impressions) AS impressions,

SUM(clicks) AS clicks,

SUM(cost) AS cost,

SUM(conversions) AS conversions,

ROUND(SUM(cost) / NULLIF(SUM(conversions), 0), 2) AS cpa

FROM `YOUR_PROJECT_ID.ad_data.google_ads_performance`

WHERE date >= DATE_SUB(CURRENT_DATE(), INTERVAL 60 DAY)

GROUP BY country, region

HAVING conversions >= 5

ORDER BY conversions DESC;Use case: Geographic analysis often reveals significant differences in conversion rates or CPAs between regions, allowing you to optimize budget allocation.

Query 6: Top Keywords Performance

SELECT

keyword,

SUM(impressions) AS impressions,

SUM(clicks) AS clicks,

SUM(cost) AS cost,

SUM(conversions) AS conversions,

ROUND(SUM(cost) / NULLIF(SUM(conversions), 0), 2) AS cpa,

ROUND(SUM(clicks) / NULLIF(SUM(impressions), 0) * 100, 2) AS ctr

FROM `YOUR_PROJECT_ID.ad_data.google_ads_keywords`

WHERE date >= '2025-01-01'

AND impressions > 1000

GROUP BY keyword

ORDER BY conversions DESC

LIMIT 50;Note: Don't worry about understanding every SQL function. Just copy, change the dates ('2025-01-01' to whatever you need), and run. The query processes the logic for you.

For more complex analysis combining multiple data sources, see our guide on choosing the best data warehouse for marketing.

Connecting BigQuery to Looker Studio (5 Minutes)

SQL tables work, but stakeholders want charts. Looker Studio is Google's free dashboarding tool.

Fast setup:

- Go to Looker Studio → Create → Data Source → BigQuery

- Authorize → Select your project + dataset + table

- Click "Connect"

- Drag and drop: Line chart for trends, bar chart for campaign comparison, scorecards for totals

Performance optimization: Don't connect Looker Studio directly to raw tables with millions of rows. Create a summary view first:

CREATE OR REPLACE VIEW `YOUR_PROJECT_ID.ad_data.dashboard_summary` AS

SELECT

date,

campaign_name,

SUM(cost) AS cost,

SUM(conversions) AS conversions

FROM `YOUR_PROJECT_ID.ad_data.google_ads_performance`

GROUP BY date, campaign_name;

Connect Looker Studio to dashboard_summary instead of the raw table. This cuts load time by 60-80% and reduces query costs because you're processing aggregated data, not scanning millions of rows every dashboard refresh.

For more on optimizing dashboard performance, see why Looker Studio is slow and how to fix it.

When BigQuery Actually Makes Sense

Use BigQuery If You:

✓ Process 50,000+ rows monthly across platforms

✓ Run campaigns on 3+ channels simultaneously

✓ Need to compare Q1 2024 vs Q1 2025 performance

✓ Have 2+ people needing the same data

✓ Calculate custom metrics (LTV, cohort analysis, multi-touch attribution)

Stick with Sheets If You:

✗ Run only Facebook Ads with fewer than 10 active campaigns

✗ Process under 10,000 rows monthly

✗ Monthly ad spend under $3,000

✗ Only need quarterly reports

The Honest Break-Even Point

Based on working with agencies and in-house teams, BigQuery becomes worth it at:

- Monthly ad spend: $10,000+

- Platforms: 3 or more

- Reporting frequency: Weekly minimum

- Team size: 2+ people accessing data

Below these thresholds, Google Sheets is fine. The time saved with BigQuery doesn't justify the learning curve.

BigQuery Cost Reality Check

BigQuery charges for storage (cheap) and queries (also cheap if you're smart about it). Per Google Cloud's official pricing:

Storage Costs

- First 10 GB: Free

- After 10 GB: $0.02 per GB/month

- Data unchanged for 90 days: $0.01 per GB/month (automatic discount)

Most marketing teams store 5-50 GB. Example: 30 GB = $0.40/month.

Query Costs

- First 1 TB processed per month: Free

- After 1 TB: $5 per TB

What "processed" means: If your table has 20 columns but you only SELECT 3 columns, BigQuery charges only for those 3 columns, not the entire table.

Real Cost Examples

Small team (2 platforms, 1 year history):

- Storage: 12 GB = $0.04/month

- Queries: 80 GB processed = Free

- Total: $0.04/month

Agency (5 clients, 3 platforms each):

- Storage: 150 GB = $2.80/month

- Queries: 600 GB processed = Free

- Total: $2.80/month

Large brand (8 platforms, 3 years history):

- Storage: 500 GB = $9.80/month

- Queries: 1.8 TB processed = $4/month

- Total: $13.80/month

How to Keep Costs Low

Filter by date: WHERE date >= '2025-01-01' prevents scanning years of old data

Select specific columns: Never use SELECT * in production. List only the columns you need

Create summary tables: Store pre-aggregated weekly/monthly data for frequent queries

Schedule wisely: Run queries once daily at 3 AM, not 20 times throughout the day

According to Google's cost estimation guide, efficient query patterns keep most marketing teams under $10/month.

FAQ

Can I really use BigQuery without learning SQL?

For 90% of marketing analytics, yes. The 6 queries above handle the most common reports. You only need to understand SQL at a "change the dates and table names" level. When you need something custom, describe what you want to ChatGPT or Claude: "Show campaigns with CPA under $40, grouped by device, sorted by spend." AI tools write SQL surprisingly well for straightforward requests.

How long does BigQuery setup actually take?

Creating a Google Cloud project, enabling the API, and connecting your first data source: 15-20 minutes. Connecting 5 ad platforms with daily automated refreshes: 2-3 hours spread over a week. The hard part isn't technical. It's deciding which metrics matter for your specific business. Using Dataslayer cuts this time by 70% compared to writing custom API scripts for each platform.

What happens if I make a mistake and delete data?

The queries in this guide use SELECT statements. They're read-only and can't delete anything. To actually delete data, you'd need to intentionally type DELETE or DROP commands. Even if you did, BigQuery automatically keeps 7-day backups of all tables. You can restore with: SELECT * FROM 'table' FOR SYSTEM_TIME AS OF '2025-11-28'. The bigger risk is incorrect queries returning wrong insights, not accidentally deleting data.

How much does BigQuery really cost per month?

For most marketing teams: $0-15/month. You get 10 GB storage free and 1 TB query processing free monthly. A typical setup with 3-4 platforms, daily refreshes, and regular reporting uses 100-200 GB in queries (free) and 20-50 GB storage ($0.20-0.80/month). Costs only climb if you're processing 5+ TB monthly or storing years of high-frequency data. Even then, it's $20-40/month, not $200.

When should I use BigQuery instead of Google Sheets?

The breaking point is around 50,000 rows. Below that, Sheets works fine. Above 100,000 rows, Sheets becomes painful. Slow loading, formulas breaking, pivot tables crashing. If you're running campaigns on 3+ platforms and want historical analysis beyond "last 30 days," BigQuery makes sense. If you only need monthly snapshots from a single platform, stick with Sheets and save the learning curve.

Your First Week with BigQuery

Day 1: Create Google Cloud project, enable API, create dataset. Connect your highest-spend platform using Dataslayer's free trial.

Days 2-3: Let data accumulate (you need 2-3 days before queries return meaningful results).

Day 4: Copy Query #1. Edit your Project ID and date range. Run it. Notice the 3-second response time.

Day 5: Try Query #2. Compare the speed to what you'd experience in Sheets with equivalent data.

Week 2: Connect a second platform. Build a basic Looker Studio dashboard using the summary view method.

Week 3: Show a colleague or stakeholder your dashboard. Watch their reaction when they filter by date and it updates instantly.

The learning curve isn't steep. It's just different from Sheets. By week 3, you'll wonder how you ever managed with CSV exports and VLOOKUP formulas.

Want to skip manual CSV exports? Try Dataslayer free for 15 days to connect Google Ads, Facebook Ads, LinkedIn, TikTok, and 45+ platforms directly to BigQuery. No coding required. See our BigQuery integration guide for visual dashboard examples.

.avif)