Most marketing teams waste hours every week exporting Google Ads data to spreadsheets, only to repeat the process when they need updated numbers. BigQuery changes this completely.

Once you set up an automated Google Ads to BigQuery pipeline, your campaign data flows into a data warehouse where it combines with Facebook Ads, GA4, CRM data, and anything else you track. The data warehousing market is growing at 22.6% annually, hitting $16.88 billion by 2030, because marketers finally have tools that don't require engineering degrees.

This guide shows you three ways to automate Google Ads to BigQuery, including which method works best for your situation and the specific pitfalls that cause data mismatches.

Setup Methods Comparison

Why This Matters for Marketing Teams

The Google Ads interface doesn't answer questions like "which keywords drive customers who purchase again within 90 days?" or "how does our Google Ads performance compare to Facebook Ads when we normalize for budget differences?"

These require joining Google Ads with your analytics platform, CRM, and other ad channels. That's impossible in Google Ads but straightforward in BigQuery.

According to a 2025 marketing data report, marketers now pull 100% more data per query compared to 2020, and 70% of North American marketing teams allocate specific budget for data infrastructure. The shift from siloed platforms to unified warehouses is accelerating.

Option 1: BigQuery Data Transfer Service (Google's Native Solution)

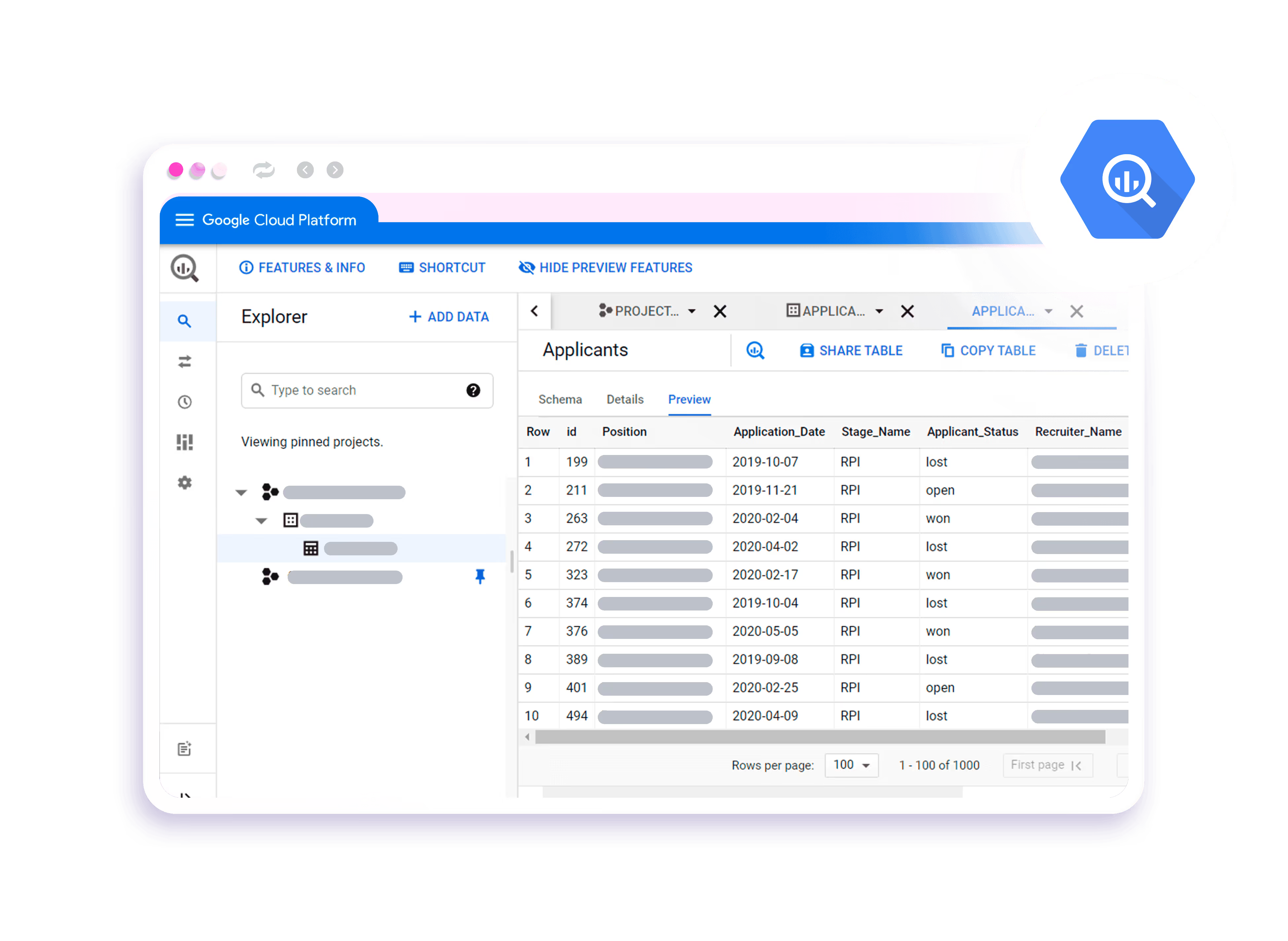

Google provides a built-in connector that automatically transfers Google Ads data to BigQuery.

What You Get

When you set up the Data Transfer Service, Google creates 88 separate tables in your BigQuery dataset:

p_CampaignBasicStats_123456789(campaign performance)p_KeywordBasicStats_123456789(keyword data)p_AdGroupStats_123456789(ad group metrics)- Plus 85 more variations

The Setup Process

- Create a Google Cloud Project with billing enabled

- Navigate to BigQuery → Data Transfers

- Click "Create Transfer" and select Google Ads

- Enter your Customer ID and schedule (daily recommended)

- Select your dataset destination

- Backfill historical data (up to 180 days per request)

Full instructions available in Google's documentation.

The Catches

Table complexity: Campaign names live in p_Campaign_123456789 while metrics live in p_CampaignBasicStats_123456789. Getting a simple campaign report requires joining multiple tables.

The impression discrepancy issue: If you use p_CampaignStats instead of p_CampaignBasicStats, your impression counts will be wrong. Stats tables include a ClickType field that splits impressions by where they occurred (headline, sitelink, etc.), inflating your numbers. This Stack Overflow thread documents the exact problem.

Conversion attribution window: Data Transfer uses a 7-day window. Google Ads UI defaults to 30 days. Conversions appearing 8-30 days after click show up in UI but not BigQuery, making your BigQuery conversion numbers lower.

Multiple accounts: You need separate transfers for each Google Ads account. Managing 10 accounts means 10 configurations and 880 tables.

Pros: Free (storage costs only), direct from Google, updates daily

Cons: Complex table structure, requires SQL knowledge, 180-day historical limit per backfill

Option 2: No-Code Data Integration Platforms

Platforms like Dataslayer handle the complexity by pulling data via Google Ads API and organizing it into analysis-ready tables.

How It Works

Connect your Google Ads accounts → Select metrics/dimensions → Choose update frequency → Data flows to BigQuery automatically.

Instead of 88 tables with complex relationships, you get structured tables matching how you actually want to analyze campaigns.

Setup Example with Dataslayer

- Go to app.dataslayer.ai/bigquery

- Click "New Transfer" → Select "Google Ads" as data source

- Authenticate and choose accounts (can select multiple)

- Pick from pre-built templates (Campaign Performance, Keyword Analysis, etc.) or build custom queries

- Enter your BigQuery project details

- Set refresh schedule (hourly, daily, weekly) if needed

- Import historical data

The platform handles dimension/metric compatibility automatically and warns you about invalid combinations that would cause errors.

Key Advantages

Multi-platform support: Connect Google Ads, Facebook Ads, LinkedIn, TikTok, and 40+ sources to the same BigQuery project. Compare performance across platforms without writing custom API integrations.

Pre-structured data: No joining 88 tables. Your data arrives ready to query.

Historical data: Pull years of history in one import, not just 180 days.

Template libraries: Pre-built configurations for common reports save you from testing dimension/metric combinations.

More details in Dataslayer's BigQuery connector guide.

Pros: No coding required, multi-platform support, unlimited historical data

Cons: Subscription cost (vs. free native connector), relies on API rate limits

Option 3: Manual CSV Exports (When It Makes Sense)

Download a CSV from Google Ads → Upload to BigQuery.

Only use this for: One-time analysis, testing before committing to automation, or if you're analyzing specific date ranges that won't be repeated.

Why it doesn't scale: Takes 20 minutes per export. Different column names when Google changes export formats. No automation means someone must remember to update it.

Google's CSV upload guide explains the mechanics.

Common Pitfalls and Solutions

Pitfall 1: Data Doesn't Match Google Ads UI

Your BigQuery shows 10,523 impressions. Google Ads UI shows 10,498. Why?

Root causes:

- Wrong table: Using Stats tables instead of BasicStats tables

- Timezone: BigQuery stores UTC; UI displays account timezone

- Conversion window: 7 days (BigQuery native) vs 30 days (UI default)

- Data freshness: Transfers complete ~2 AM PST; querying earlier shows incomplete data

Fix: Use BasicStats tables, query consistent timezones, document your conversion window, wait 24 hours before comparing recent data.

Expect 1-3% variance even with perfect setup—data processing differences between platforms cause minor discrepancies.

Pitfall 2: Query Costs Add Up

BigQuery charges $6.25 per TB scanned. Running SELECT * on a 50 GB table costs $0.31. Do that 100 times monthly = $31.

Solutions:

- Partition tables by date: Only scan relevant date ranges

- Select specific columns:

SELECT campaign_name, costvsSELECT * - Use clustered tables: Organize by frequently filtered columns

Small account reality check: 500 MB data + 100 queries/month = ~$0.50 total. Even large accounts rarely exceed $20-30 monthly unless poorly optimized.

Pitfall 3: Duplicate Data

You set up two transfers to the same table. Now metrics are doubled.

Detection query:

SELECT date, campaign_id, COUNT(*) as row_count

FROM `project.dataset.google_ads_campaigns`

GROUP BY date, campaign_id

HAVING row_count > 1

Fix: Use upsert write mode instead of append. Implement unique keys based on your data granularity.

Use Cases

Agency Managing 30+ Client Accounts

Before: 3 hours per client monthly pulling reports

After: Automated pipelines → Looker Studio dashboards → 30 seconds per client

Setup: All client accounts → BigQuery → Looker Studio connector → automated client dashboards

Result: Agency scales from 30 to 50+ clients without hiring additional analysts.

E-commerce: Product-Level ROAS Analysis

Challenge: 500+ products across Google Shopping and Facebook Catalog. Which products are actually profitable after accounting for product costs?

Solution: Google Ads Shopping data + Facebook Catalog data + product cost data → all in BigQuery

Query logic:

SELECT

product_name,

SUM(google_revenue + facebook_revenue) as total_revenue,

SUM(google_cost + facebook_cost) as ad_spend,

SUM(google_revenue + facebook_revenue) - SUM(google_cost + facebook_cost) - (product_cost * units) as net_profit

FROM consolidated_product_data

GROUP BY product_name, product_cost

ORDER BY net_profit DESC

Result: Identified 83 negative-ROAS products. Paused those campaigns. Increased budget on 127 high-profit products. Overall ROAS improved from 3.2x to 4.7x in 60 days.

SaaS: Multi-Touch Attribution

Problem: Free trial users touch LinkedIn Ads, Google Ads, and organic search before converting. Last-click attribution credits only Google Ads, ignoring LinkedIn's role.

Solution: Build custom attribution model in BigQuery combining Google Ads data + LinkedIn Ads data + GA4 session data.

Attribution weights: First touch 30%, last touch 40%, middle touches share 30%.

Discovery: LinkedIn Ads drove few direct conversions but was first touch for 42% of paid customers. Previously considered cutting LinkedIn budget; now investing more.

FAQ

How much does BigQuery storage cost?

$0.02 per GB per month for active storage. A typical Google Ads account with one year of campaign data = 100-500 MB = $0.002-$0.01 monthly. Essentially free.

Query processing is $6.25 per TB scanned. Optimized accounts spend $5-20 monthly. Compare this to analyst time saved (10 hours monthly = $500-1,000 in labor costs).

Can I import historical data beyond what's visible in Google Ads?

Yes. Google Ads interface shows 13 months, but more data exists.

Native Data Transfer Service: Up to 180 days per backfill request. For multiple years, run sequential backfills.

No-code platforms: Usually unlimited. Specify "January 1, 2020 to present" and get all available data in one automated process (takes 30-90 minutes for accounts with several years).

Google retains most campaign data indefinitely, though search query data older than 3-5 years may be incomplete.

How often should I refresh data?

Daily (recommended): Schedule overnight (2-4 AM). Captures finalized data plus conversion updates to previous days.

Hourly: For real-time dashboards. Uses more API quota and costs slightly more.

Weekly: For stable, low-spend accounts reviewed monthly.

Start daily. Adjust based on actual usage patterns.

My impressions don't match Google Ads UI. Why?

Most common cause: Using p_CampaignStats instead of p_CampaignBasicStats. The Stats table includes ClickType field that multiplies impression counts.

Correct approach:

-- ✅ Use this

SELECT SUM(impressions) FROM `dataset.p_CampaignBasicStats_12345`-- ❌ Not this (inflates numbers)

SELECT SUM(impressions) FROM `dataset.p_CampaignStats_12345`

Other causes: Timezone differences (UTC vs account timezone), conversion attribution window (7 vs 30 days), data freshness (querying before transfer completes).

Expect 1-3% variance even with perfect setup.

Do I need SQL knowledge?

For setup: No. Modern platforms offer no-code interfaces for connecting accounts and scheduling transfers.

For analysis: Basic SQL helps. If you understand Excel pivot tables, you can learn SQL basics in a few hours. Mode Analytics SQL School offers free tutorials.

Alternative: Connect BigQuery to Looker Studio. Build dashboards with drag-and-drop. Looker Studio generates SQL automatically in the background.

How do I connect BigQuery to visualization tools?

Looker Studio (free): Add data → BigQuery → select project/dataset/table → build charts

Tableau: Connect to Data → Google BigQuery → authenticate → select tables

Power BI: Get Data → Google BigQuery → enter project ID → load tables

All these tools auto-refresh when BigQuery updates, keeping dashboards current without manual work.

Can I combine Google Ads with other marketing data?

This is the main point of using BigQuery. Example combining Google Ads and Facebook Ads:

SELECT

'Google Ads' as platform,

SUM(cost) as spend,

SUM(conversions) as conversions,

ROUND(SUM(cost) / NULLIF(SUM(conversions), 0), 2) as cpa

FROM google_ads_table

WHERE date >= '2025-10-01'

UNION ALL

SELECT

'Facebook Ads' as platform,

SUM(spend) as spend,

SUM(conversions) as conversions,

ROUND(SUM(spend) / NULLIF(SUM(conversions), 0), 2) as cpa

FROM facebook_ads_table

WHERE date >= '2025-10-01'

One query showing cross-platform performance. Update daily without touching the code.

For more on combining platforms, see Dataslayer's guide on choosing the best cloud data warehouse for marketing.

Getting Started

If you export Google Ads data more than once weekly, automation pays for itself immediately.

Technical teams: Set up Google's native BigQuery Data Transfer Service. Budget 1-2 hours for initial configuration. Expect ongoing time managing 88 tables and writing SQL.

Non-technical teams: Use platforms that handle complexity automatically. Set up takes 10 minutes and doesn't require SQL knowledge.

Start small: Pick your highest-spend account. Set up one automated transfer. Verify data matches expectations. Expand to additional accounts and platforms.

The marketing teams seeing the biggest wins automated data collection six months ago and have been making faster decisions ever since.

Want to automate Google Ads, Facebook Ads, LinkedIn, and 40+ other platforms to BigQuery? Try Dataslayer free for 15 days - no credit card required.