ChatGPT has quickly become a go-to tool for meaningful conversations. Its ability to understand complex queries and produce detailed, thoughtful responses has changed the way we work and create. But for many users, its real strength goes beyond just conversation. With Plugins and Custom GPTs, ChatGPT can interact with the outside world, doing everything from pulling in real-time stock market data to organizing your schedule.

While these features are powerful, they come with limitations, mainly because they operate within a closed, proprietary system. For developers and businesses, this presents a challenge: vendor lock-in. If you want to integrate a different large language model (LLM), you'd essentially have to rebuild everything from scratch.

Enter the Model Context Protocol (MCP). Developed by the team at Anthropic, the creators of Claude, the MCP is an open standard that aims to unify how LLMs connect with external tools. While it's not an OpenAI invention, understanding the MCP is key because it opens the door to a more open, flexible, and powerful future for creating AI agents with ChatGPT. It’s the protocol that makes true LLM interoperability possible.

In this article, we’ll dive into why the MCP is so important for anyone using ChatGPT, compare it to OpenAI’s current tools, and explain how it could completely change how you approach workflow automation and data management.

Understanding ChatGPT Tools and Integrations

Before we can see how the Model Context Protocol (MCP) adds value, it’s important to first understand the current state of ChatGPT API integration.

- ChatGPT Plugins (Legacy): When plugins were first introduced, they became the primary way to link ChatGPT with external services. You'd install a plugin from a marketplace to enable the model to perform specific tasks. While this approach worked, it was far from ideal. To connect to different apps, say a CRM and a calendar, you needed separate plugins from different developers, making the whole system feel disjointed.

- Custom GPTs & Actions (Current): OpenAI's recent update, Custom GPTs, improved things significantly. Now, developers can create "Actions" directly within a specific GPT, offering a smoother, more integrated experience. For users, this is a great step forward. But for developers, the main limitation still exists: these integrations are locked to that specific GPT and must be built on OpenAI’s platform. So, if you want your tool to work with other language models, like Anthropic's Claude or Google's Gemini, you’d have to start from scratch.

While this model works well for users, it creates a "walled garden" for developers. Your work is tied to a single ecosystem, which limits your ability to scale, move data, and have the freedom to develop across different platforms.

How the MCP Solves the Fragmentation Problem in LLM Integration

The digital world is built on open standards. Think of HTTP for the web or SMTP for email; these protocols let different systems talk to each other without issue. In the realm of large language models (LLMs), this kind of standard was missing until the Model Context Protocol (MCP) came along.

The MCP tackles fragmentation by introducing a universal standard. Instead of developing a custom "action" for every LLM, a developer only needs to create one MCP Server that offers a set of tools. Any LLM that understands the MCP can connect to that server and use its tools, no matter who created the model.

This shift is key. It takes the focus off building model-specific integrations and instead allows for the creation of one universal bridge. For developers and businesses, this means:

- Reduced Development Time: You build one integration layer, and it works with any LLM that supports the protocol.

- Enhanced Control: You keep full ownership and control over the MCP Server and your data, rather than relying on third-party marketplaces.

- Future-Proofing: Your AI applications won’t be tied to a single vendor’s roadmap. If a new, more powerful LLM comes out and it supports the protocol, you can connect it to your existing MCP Server, and your applications will keep running smoothly.
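The "one server, any model" idea can be sketched as a small dispatcher. MCP messages are JSON-RPC 2.0, and clients discover and invoke tools through the `tools/list` and `tools/call` methods. The example below is a simplified illustration under stated assumptions, not a complete server: it skips the initialization handshake and the transport layer, and the `get_stock_price` tool, its canned price, and the `handle_message` helper are hypothetical names invented for this sketch.

```python
import json


def get_stock_price(symbol: str) -> str:
    """Hypothetical tool: returns canned data instead of calling a real API."""
    prices = {"AAPL": "189.50"}  # placeholder value, not real market data
    return prices.get(symbol.upper(), "unknown")


# Hypothetical tool registry. Each entry carries the fields a client sees
# when it lists tools, plus the Python function that does the work.
TOOLS = {
    "get_stock_price": {
        "description": "Return the latest price for a ticker symbol.",
        "inputSchema": {
            "type": "object",
            "properties": {"symbol": {"type": "string"}},
            "required": ["symbol"],
        },
        "handler": get_stock_price,
    }
}


def _reply(req_id, key, value) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, key: value})


def handle_message(raw: str) -> str:
    """Handle one JSON-RPC request string and return the response string."""
    request = json.loads(raw)
    req_id = request.get("id")
    method = request.get("method")

    if method == "tools/list":
        # Advertise every tool without exposing the handler itself.
        tools = [
            {
                "name": name,
                "description": tool["description"],
                "inputSchema": tool["inputSchema"],
            }
            for name, tool in TOOLS.items()
        ]
        return _reply(req_id, "result", {"tools": tools})

    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(req_id, "error", {"code": -32602, "message": "Unknown tool"})
        text = tool["handler"](**params.get("arguments", {}))
        return _reply(req_id, "result", {"content": [{"type": "text", "text": text}]})

    return _reply(req_id, "error", {"code": -32601, "message": "Method not found"})
```

Nothing in the dispatcher refers to a particular model. Any client that sends these two message types can use the same tool, which is the property the list above describes.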

Comparing MCP vs. Plugins & Custom GPTs: Key Differences

To truly grasp the value of the Model Context Protocol (MCP), it’s helpful to compare it directly with OpenAI’s existing tools.

The comparison comes down to priorities. OpenAI’s solutions focus on ease of use and a streamlined experience within their own ecosystem, while the MCP emphasizes flexibility and control, giving developers more freedom in how they build integrations.

How to Use the MCP with ChatGPT

The Model Context Protocol (MCP) stands out because of its flexibility. It isn’t a one-size-fits-all solution, but rather a protocol that can be adapted in different ways based on your needs. The MCP was introduced in late 2024, and with its full implementation in ChatGPT in September 2025, there are now two main approaches for integrating it with ChatGPT.

1. Using a Custom GPT

This option offers the most polished and user-friendly experience. It's perfect for developers and businesses who want to create a custom, branded agent designed for a specific task.

The Workflow:

- Developer Action: The developer starts by setting up and hosting a secure MCP server at a public URL (or uses a third-party provider). This server holds the logic and API keys for any external tools the agent will use.

- GPT Configuration: The developer creates a Custom GPT on OpenAI’s platform. In the GPT’s "Actions" settings, they paste the URL of their MCP server and define the tools it connects to. This setup is done once, and the end-user never sees it.

- End-User Experience: The user simply interacts with the Custom GPT in a normal chat. When a prompt requires external action, like "Summarize our sales data from Q3," ChatGPT recognizes that it needs to call one of the predefined actions. The MCP server processes the request and sends the results back to the GPT, which then generates the final answer.
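One consequence of this workflow is that credentials stay on the server: the model only ever sees the tool's result. A minimal sketch of that idea follows. The `summarize_sales` function, the `SALES_API_KEY` variable name, and the canned revenue figure are all assumptions made for illustration, and a real handler would call an actual sales backend where the comment indicates.

```python
import os


def summarize_sales(quarter: str, env=os.environ) -> dict:
    """Hypothetical tool handler that keeps its API key server-side."""
    api_key = env.get("SALES_API_KEY")  # assumed variable name
    if not api_key:
        # Report the failure without leaking configuration details.
        return {
            "isError": True,
            "content": [{"type": "text", "text": "Sales tool is not configured."}],
        }

    # A real server would call the sales backend with api_key here.
    # Canned placeholder figures stand in for that call.
    revenue_by_quarter = {"Q3": 120000}
    revenue = revenue_by_quarter.get(quarter.upper())
    if revenue is None:
        text = f"No sales data found for {quarter}."
    else:
        text = f"{quarter.upper()} revenue: {revenue}"

    # Only the summary text goes back to the model, never the key.
    return {"isError": False, "content": [{"type": "text", "text": text}]}
```

Passing `env` as a parameter is a design choice for this sketch: it lets the handler be exercised with a plain dictionary instead of real environment variables.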

2. A Normal Conversation

This workflow is designed for power users, admins, or developers who need quick access to a tool without creating a full agent. It’s a key feature of the full MCP implementation, rolled out in September 2025.

The Workflow:

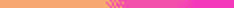

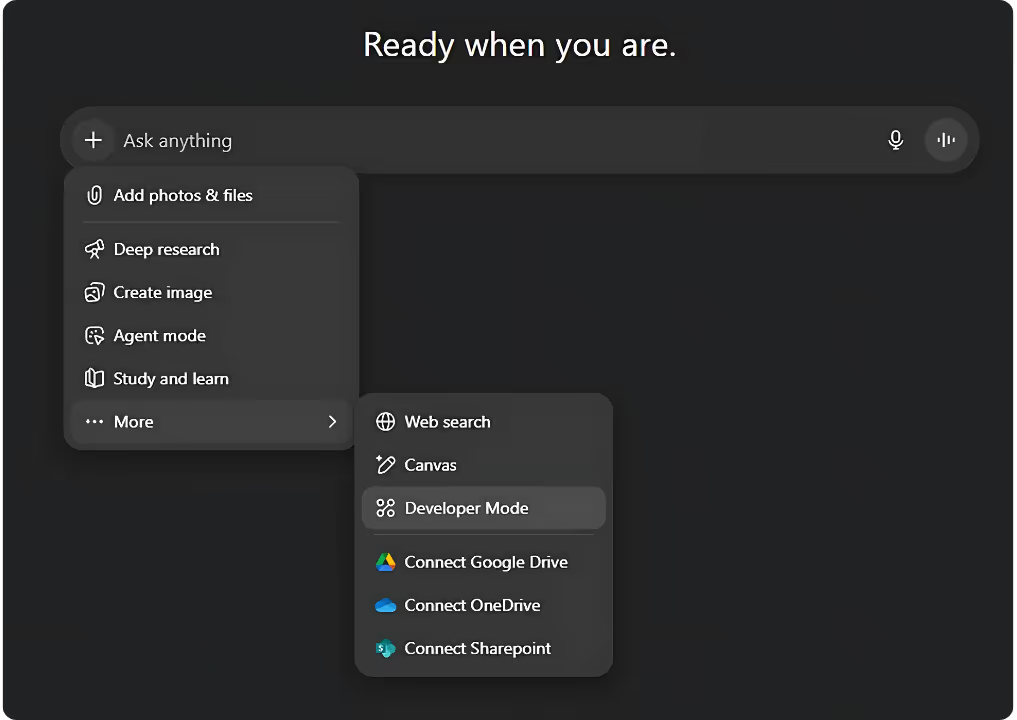

- Initial Setup: The user (or system administrator) enables "Developer Mode" in ChatGPT settings. From there, they add the external MCP server by pasting its URL. This is a manual, user-driven action that gives permission to the specific server.

- User Action: In a standard chat, the user can now write a prompt that directly references a tool connected to the server. For instance, if a financial data server is connected, a user might type, "What’s the current stock price for AAPL?"

- Real-Time Connection: ChatGPT recognizes the user's intent to use an external tool, makes a real-time call to the MCP server, retrieves the data, and displays the result in the chat. This method is all about on-demand, user-initiated actions without needing a pre-set agent.
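From the client's side, the real-time connection step amounts to sending a `tools/call` request and reading the text out of the reply. The sketch below shows that round trip at the message level, assuming JSON-RPC 2.0 framing. The helper names `build_tool_call` and `extract_text` and the `get_stock_price` tool are hypothetical, and the transport that actually carries the messages to the server is left out.

```python
import json
from itertools import count

# Simple counter so every request gets a distinct JSON-RPC id.
_request_ids = count(1)


def build_tool_call(name: str, arguments: dict) -> str:
    """Serialize a tools/call request for the named tool."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": next(_request_ids),
            "method": "tools/call",
            "params": {"name": name, "arguments": arguments},
        }
    )


def extract_text(raw_response: str) -> str:
    """Pull the text parts out of a tools/call response, or raise on error."""
    response = json.loads(raw_response)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "tool call failed"))
    parts = response["result"].get("content", [])
    return "\n".join(part["text"] for part in parts if part.get("type") == "text")
```

For the stock price prompt above, a client would send `build_tool_call("get_stock_price", {"symbol": "AAPL"})` to the server and pass the reply through `extract_text` before handing the result to the model.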

Why a Trusted URL Matters for Security

In both workflows, one key factor is the trustworthiness of the MCP URL. By connecting an MCP server, you’re allowing the LLM to interact with an external system.

A malicious or untrusted MCP server could potentially execute harmful commands or access sensitive data. It’s vital to only connect to MCP servers from reliable, well-documented sources. A trusted MCP server should:

- Be hosted on a secure domain with HTTPS.

- Provide a clear, publicly available specification of the tools it exposes.

- Follow security best practices, including strong authentication and robust access controls.
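The first check on that list can be automated before a server is ever connected. The sketch below rejects any URL that is not HTTPS or whose host is not on an explicit allowlist. The `TRUSTED_HOSTS` set and the `mcp.example.com` host are placeholders for illustration, and a check like this complements authentication and access controls rather than replacing them.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of MCP hosts your organization has reviewed.
TRUSTED_HOSTS = {"mcp.example.com"}


def is_trusted_mcp_url(url: str, trusted_hosts=TRUSTED_HOSTS) -> bool:
    """Return True only for HTTPS URLs whose exact host is allowlisted."""
    parsed = urlparse(url)
    if parsed.scheme != "https":
        return False
    # hostname drops any port or userinfo, so lookalike tricks such as
    # "https://mcp.example.com@evil.net/" resolve to the real host.
    host = (parsed.hostname or "").lower()
    return host in trusted_hosts
```

Matching on the exact hostname, rather than on a substring or prefix, is deliberate: a URL such as `https://mcp.example.com.evil.net/` contains the trusted name but points somewhere else entirely.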

Content Auditing Across Platforms: How MCP Makes It Easier

The move from a proprietary plugin model to an open-standard protocol opens up a world of new possibilities for professionals in various fields.

- Streamlined Marketing Workflows: Picture having one AI agent that can handle campaigns across multiple platforms. It could analyze ad performance on Google Ads, pull customer information from Salesforce, and automatically update your content calendar in a CMS, all through a single, standardized protocol. This would allow for smooth, end-to-end automation, saving time and effort.

- Cross-Platform Content Auditing: A content strategist could use an MCP-powered ChatGPT agent to pull data from an internal knowledge base via a database API and cross-reference it with real-time keyword trends from an SEO tool. The agent could then create a detailed content gap analysis, providing valuable insights that are both accurate and up-to-date.

- Data Control and Security: For enterprises, protecting data is crucial. By hosting their own MCP Server, companies can keep sensitive data under their control, ensuring it doesn’t pass through third-party platforms. Additionally, if they decide to switch to a different LLM down the road, migrating the entire automation workflow can be done with minimal hassle.

Conclusion

The Model Context Protocol isn’t just another alternative to OpenAI's plugin system; it’s an important move towards a more flexible and open future for AI. While OpenAI's tools are incredibly user-friendly, the MCP offers developers and businesses a way to take back control, avoid being locked into one vendor, and build AI systems that can truly work together.

The future of AI will be shaped not by the most powerful model, but by the most effective and open ecosystem. By exploring the MCP, you’re not just adopting a new technology; you’re supporting the idea of creating systems built on open standards, which could lead to more creative and innovative solutions down the road.

For more insights on how the Model Context Protocol (MCP) is transforming AI, check out our articles on how MCP is unlocking Claude's potential for SEO and marketing automation, how it boosts Mistral’s interoperable AI agents, and a detailed comparison of Claude, ChatGPT, and Mistral: Which LLM is best for your AI agent?