Artificial Intelligence (AI) has always aimed to do more than just hold a conversation. The goal is to create systems that can understand, reason, and interact with the world, whether that’s scheduling meetings, analyzing live market data, or crafting hyper-targeted marketing campaigns. The rise of Large Language Models (LLMs) like ChatGPT, Claude, and Mistral has made this vision more attainable, but connecting them to external tools has remained a fragmented and often proprietary challenge.

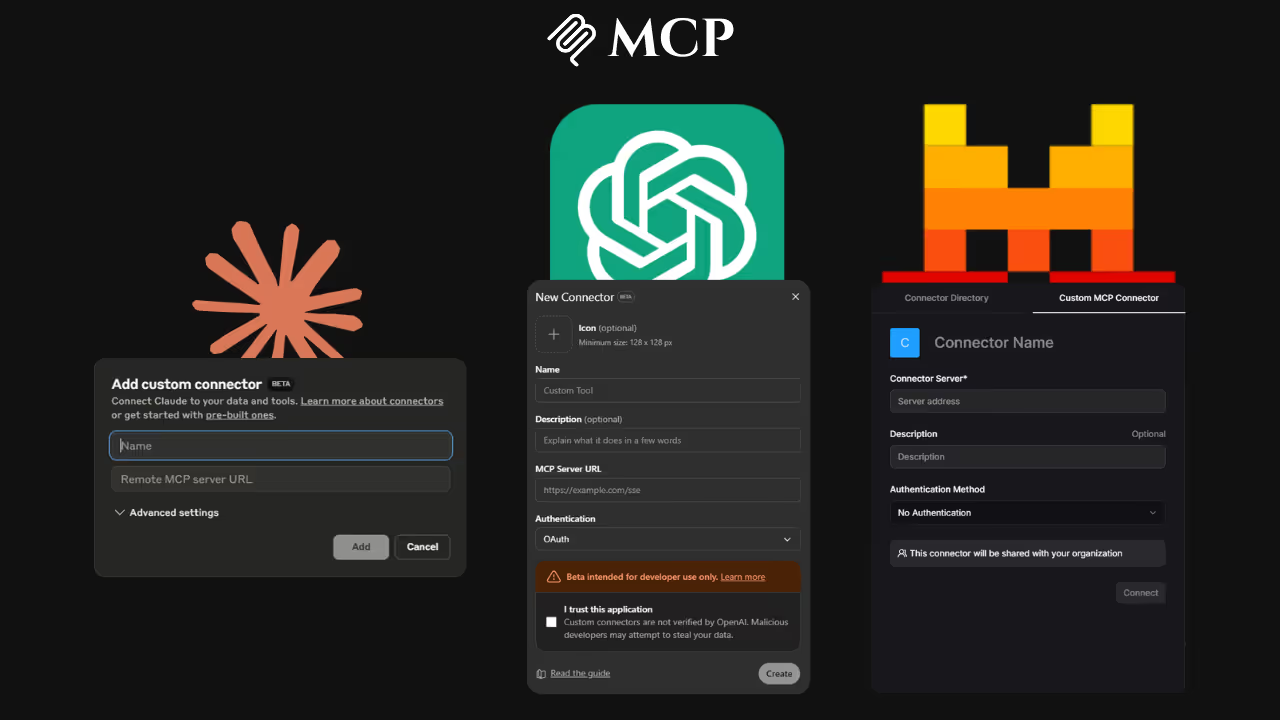

That’s where the Model Context Protocol (MCP) comes in. This open standard, first introduced by Anthropic (the company behind Claude), is quickly becoming a widely adopted framework for connecting LLMs to external tools and services. It gives developers a common way to build AI agents that carry out real-world tasks.

Although the MCP provides a shared foundation, each major LLM provider uses and adapts it differently, reflecting different philosophies, strengths, and ideal use cases. For professionals in marketing, SEO, content strategy, and development, understanding these differences is essential. This article compares how the MCP works with ChatGPT, Claude, and Mistral to help you choose the platform that best fits your goals and technical needs.

The Common Thread: Understanding the Model Context Protocol (MCP)

Before we dive into how each LLM handles the MCP, let’s take a moment to understand what it is. Simply put, the MCP is an open standard that defines how LLM applications connect to external tools and data sources. Think of it as a universal adapter: a tool exposed once through the MCP, whether it lets the model "search the web," "send an email," or "update a database," can be used in the same way by any MCP-compatible application, no matter which LLM powers it.
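Much of that consistency comes from how tools are described. Under the MCP specification, a server advertises each tool (in its `tools/list` response) as a name, a human-readable description, and a JSON Schema for its arguments in an `inputSchema` field. The minimal sketch below builds one such descriptor as a plain Python dictionary. The `send_email` tool and its fields are hypothetical, and a real server would usually generate this through an MCP SDK rather than by hand.

```python
import json

def describe_tool(name: str, description: str, properties: dict, required: list) -> dict:
    """Build one tool entry in the shape an MCP server lists under tools/list."""
    return {
        "name": name,
        "description": description,
        # JSON Schema describing the arguments the tool accepts.
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }

# Hypothetical "send_email" tool; the name and fields are illustrative only.
send_email = describe_tool(
    name="send_email",
    description="Send a plain-text email to one recipient.",
    properties={
        "to": {"type": "string", "description": "Recipient address"},
        "subject": {"type": "string"},
        "body": {"type": "string"},
    },
    required=["to", "subject", "body"],
)

print(json.dumps(send_email, indent=2))
```

Because the descriptor is plain JSON Schema, nothing in it depends on which model will eventually decide to call the tool.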

The MCP works on a client-server model:

- An MCP Server exposes a set of tools (such as an API to your CRM, a web search function, or an email sender) that any connected MCP client can discover and call.

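- The application using the LLM acts as the client (the MCP specification calls this application the host). It gives the model the user’s prompt along with the available tools; when the model decides which tool to use, the client formats the request according to the MCP (as a JSON-RPC 2.0 message) and sends it to the MCP Server.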

- The MCP Server processes the request and sends the results back to the client, which passes them to the LLM so it can complete its response.
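The client-server exchange can be sketched without any SDK. MCP messages are JSON-RPC 2.0 objects, and over the stdio transport a client exchanges them with a locally launched server as newline-delimited lines. The toy server below answers `tools/list` and `tools/call`. It is a sketch under simplifying assumptions: it skips the `initialize` handshake, capability negotiation, resources, and prompts, and the `web_search` tool is a hypothetical stub. In-memory streams stand in for the server process's stdin and stdout.

```python
import io
import json

def web_search(query: str) -> str:
    # Hypothetical stub; a real server would call a search API here.
    return f"(stub) top result for {query!r}"

# Tool registry: name -> (descriptor advertised via tools/list, Python callable).
TOOLS = {
    "web_search": (
        {
            "name": "web_search",
            "description": "Search the web and return the top result.",
            "inputSchema": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        },
        web_search,
    ),
}

def error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}

def handle(request: dict):
    """Answer one JSON-RPC 2.0 request; return None for notifications (no id)."""
    if "id" not in request:
        return None  # JSON-RPC 2.0 notifications never get a reply
    req_id, method = request["id"], request.get("method")
    if method == "tools/list":
        result = {"tools": [descriptor for descriptor, _ in TOOLS.values()]}
    elif method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return error(req_id, -32602, "Unknown tool")
        text = entry[1](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(req_id, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": req_id, "result": result}

def serve(inp, out) -> None:
    """Read newline-delimited JSON-RPC messages from inp and write replies to out."""
    for line in inp:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            out.write(json.dumps(reply) + "\n")

# What a client might send: discover the tools, then call one.
messages = [
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
     "params": {"name": "web_search", "arguments": {"query": "MCP spec"}}},
]
client_to_server = io.StringIO("".join(json.dumps(m) + "\n" for m in messages))
server_to_client = io.StringIO()
serve(client_to_server, server_to_client)

replies = [json.loads(line) for line in server_to_client.getvalue().splitlines()]
print(replies[1]["result"]["content"][0]["text"])  # (stub) top result for 'MCP spec'
```

In a real deployment the client would start the server as a subprocess and write to its stdin; the message shapes stay the same.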

This standardization makes it much easier to build powerful AI agents. However, the choice of LLM still plays a big role in shaping the development process, the tools available, and the overall results you can expect.

How the Model Context Protocol (MCP) Works Across Top LLMs

Although the Model Context Protocol offers a standard framework, each LLM (ChatGPT, Claude, and Mistral) implements it in its own way, shaped by its core strengths and target users.

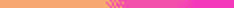

1. ChatGPT: Ecosystem Integration at Scale

ChatGPT’s adoption of the Model Context Protocol is a strategic step, reflecting OpenAI’s goal of expanding its vast platform while addressing the need for a more standardized approach to tool integration beyond its own plugins and Custom GPT actions.

- Philosophy: ChatGPT is a widely adopted, general-purpose AI platform used across many sectors for its robust capabilities and deep knowledge base. Its approach to the MCP centers on ecosystem integration: with a massive user base and developer community already in place, the MCP serves as a bridge that connects this ecosystem to external tools in a more standardized, future-proof way.

- Key Advantages:

  - Access to a Huge User Base: For developers, integrating MCP-based tools into Custom GPTs gives access to ChatGPT’s large user base, which can drive visibility and adoption.

  - Familiar Developer Environment: Developers already familiar with Custom GPTs and how their Actions are configured will find it easy to extend those capabilities with MCP servers, backed by OpenAI's extensive tooling and documentation.

  - Cross-Ecosystem Connectivity: The MCP allows ChatGPT to connect with tools that other LLMs may also use, paving the way for greater interoperability while taking full advantage of ChatGPT's platform.

- Challenges in Comparison: Although MCP support is solid, its coexistence with OpenAI’s own "Actions" and "Plugins" can make integration more fragmented than Claude's unified approach. Within ChatGPT, developers need to choose between proprietary Actions and MCP depending on what best fits their needs.

- Ideal for: Projects targeting a broad consumer or professional audience that already uses ChatGPT or those looking to integrate with popular third-party tools while maintaining a seamless user experience within the ChatGPT interface.
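On the developer side, OpenAI's Responses API can attach a remote MCP server as a tool entry of type `mcp`, identified by a `server_label` and `server_url`, with a `require_approval` setting. This API route is separate from configuring a Custom GPT in the ChatGPT interface. The sketch below only builds the request body (no network call), so it runs without an API key; the label, URL, model name, and prompt are placeholders, and the exact options should be checked against OpenAI's current documentation.

```python
import json

def mcp_tool_entry(server_label: str, server_url: str, require_approval: str = "always") -> dict:
    """Describe a remote MCP server as a tool entry for OpenAI's Responses API."""
    return {
        "type": "mcp",
        "server_label": server_label,
        "server_url": server_url,
        # "always" requires an approval step before tools run; "never" skips it.
        "require_approval": require_approval,
    }

# Placeholders: the label, URL, model, and prompt are illustrative only.
request_body = {
    "model": "gpt-4.1",
    "input": "Summarize this week's open support tickets.",
    "tools": [mcp_tool_entry("crm", "https://mcp.example.com/mcp")],
}

print(json.dumps(request_body, indent=2))
# With the official `openai` Python SDK and an API key, this body could be sent as:
#   from openai import OpenAI
#   response = OpenAI().responses.create(**request_body)
```

Keeping approval on by default is a cautious choice for tools that write data, such as CRM updates or outgoing email.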

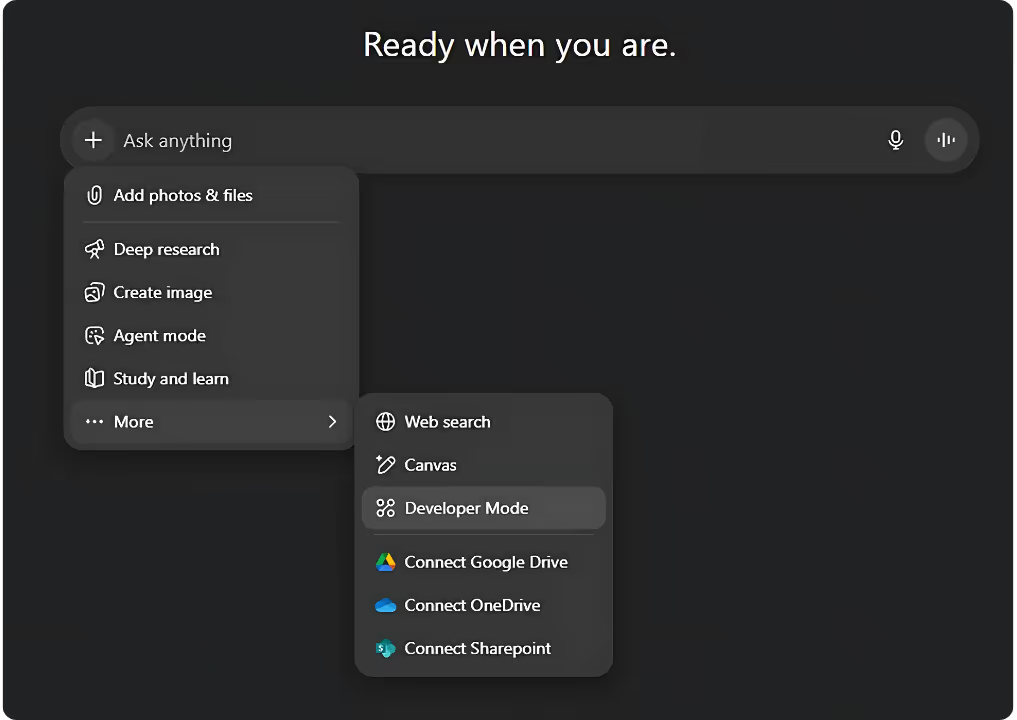

2. Claude: Seamless Integration at Its Core

For Claude, the Model Context Protocol is more than an add-on feature; it is central to the model’s design. Because Anthropic created the MCP, Claude’s integration was built from the ground up, making it a core and highly refined part of the system.

- Philosophy: Claude is an advanced, developer-oriented AI designed for technical users who need robust artifact creation and complex problem-solving. Its MCP integration feels native: the protocol and the model work together as one cohesive, dependable system. The focus is on delivering secure, capable AI agents that work seamlessly within the Anthropic ecosystem.

- Key Advantages:

  - High Reliability: Because the integration is first-party, the connection between Claude and the MCP tends to be stable and efficient, with minimal friction and consistent performance.

  - Refined Experience: Developers working with Claude benefit from Anthropic’s continuous improvements and optimizations, which keep the development process smooth.

  - Built-in Security: Because Anthropic designed the protocol, security considerations are built into how Claude uses it; for example, Claude’s apps can ask the user to approve a tool call before it runs.

- Challenges in Comparison: Although Claude excels within its own ecosystem, that deep integration may make it less flexible for projects that switch between LLM providers frequently. It is a premium experience with less room for adaptation.

- Ideal for: Projects that require high reliability, consistency, and a polished agent experience, particularly for users already committed to the Anthropic ecosystem or teams that need strong security in a managed environment.
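In practice, much of Claude’s MCP support is configuration rather than code. Claude Desktop, for example, reads local MCP servers from a `claude_desktop_config.json` file containing an `mcpServers` map, where each entry gives the command Claude Desktop should launch (plus optional arguments and environment variables). The sketch below generates that file’s contents; the `crm` server name, script path, and environment variable are hypothetical placeholders.

```python
import json

def claude_desktop_config(servers: dict) -> dict:
    """Wrap MCP server launch settings in the top-level shape Claude Desktop reads."""
    return {"mcpServers": servers}

config = claude_desktop_config({
    # Hypothetical local server: Claude Desktop starts this command and
    # exchanges MCP messages with it over stdin/stdout.
    "crm": {
        "command": "python",
        "args": ["/path/to/crm_mcp_server.py"],
        "env": {"CRM_API_KEY": "replace-me"},
    },
})

config_text = json.dumps(config, indent=2)
print(config_text)
# Save this as claude_desktop_config.json in Claude Desktop's configuration
# directory (the location depends on the OS), then restart the app.
```

Since the server is just a launched process, the same server script can also be reused by other MCP-compatible clients.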

3. Mistral: Open-Source Interoperability at Its Best

Mistral’s philosophy of open-weight models, developer control, and efficiency aligns closely with the Model Context Protocol. For Mistral, the MCP isn’t just an add-on; it’s a core part of building a truly open and flexible AI ecosystem.

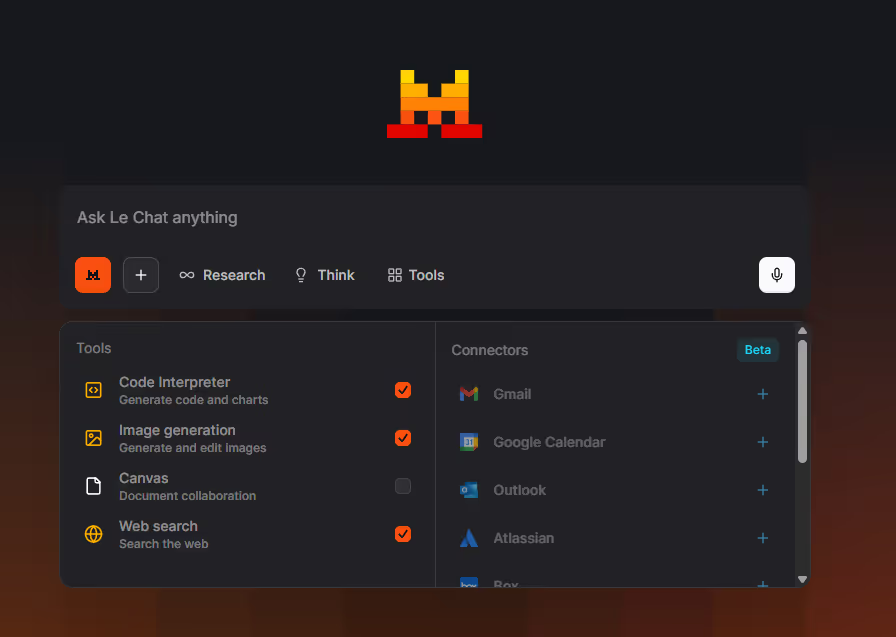

- Philosophy: Mistral combines open interoperability with a privacy- and security-first approach. Its hosted models run in EU data centers under ISO 27001-certified setups to maximize data protection. Mistral’s approach to the MCP centers on giving developers control, so they can build flexible, future-proof AI agents on Mistral’s efficient models without vendor lock-in.

- Key Advantages:

  - Maximum Portability: Mistral’s open-weight models, combined with the open MCP standard, make your AI agent highly portable. You can switch between Mistral models, or even to another provider’s model running in an MCP-compatible client, without overhauling your tool integrations.

  - Enhanced Data Control: Organizations that must keep sensitive data on-site can run Mistral models locally alongside an internal MCP server, keeping data within their own infrastructure and retaining full control over privacy.

  - Cost Efficiency & Flexibility: Mistral’s efficient models, paired with MCP’s standardized integration, offer potential cost savings and greater flexibility in deployment, whether in the cloud, on-premise, or at the edge.

  - Community-Driven Development: Mistral’s open approach encourages community collaboration, with developers building and sharing MCP tools that enrich the ecosystem for everyone.

- Challenges in Comparison: This approach typically requires more technical expertise for setting up and managing both the LLM and MCP server. It doesn’t offer the same plug-and-play experience as some proprietary platforms.

- Ideal for: Projects with strict privacy needs, developers who want complete control over their AI stack, or organizations that prioritize long-term portability and cost-efficiency over ready-to-use, pre-packaged solutions.
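One way to see the portability argument: an MCP tool’s JSON Schema can be reused unchanged when a client hands tools to different model APIs. The sketch below maps one hypothetical MCP tool descriptor into the function-calling format accepted by Mistral’s chat completion API (the OpenAI-style `{"type": "function", ...}` wrapper) and into Anthropic’s tool format. An MCP client typically does something along these lines internally; the function names here are illustrative and not part of any SDK.

```python
import json

# Hypothetical MCP tool descriptor, in the shape an MCP server returns from tools/list.
mcp_tool = {
    "name": "get_campaign_stats",
    "description": "Return click and conversion counts for a marketing campaign.",
    "inputSchema": {
        "type": "object",
        "properties": {"campaign_id": {"type": "string"}},
        "required": ["campaign_id"],
    },
}

def to_function_tool(tool: dict) -> dict:
    """Map an MCP tool to the OpenAI-style function format that Mistral's chat API uses."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # The JSON Schema is reused as-is; only the wrapper changes.
            "parameters": tool["inputSchema"],
        },
    }

def to_anthropic_tool(tool: dict) -> dict:
    """Map an MCP tool to the Anthropic Messages API tool format."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "input_schema": tool["inputSchema"],
    }

mistral_tools = [to_function_tool(mcp_tool)]
anthropic_tools = [to_anthropic_tool(mcp_tool)]
print(json.dumps(mistral_tools, indent=2))
```

Because only the thin wrapper differs, swapping the model behind the agent leaves the MCP server and its tool definitions untouched.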

Comparing LLMs: How They Align with the Model Context Protocol (MCP)

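To break down the differences more clearly, here is how each LLM aligns with the MCP:

| | ChatGPT | Claude | Mistral |
|---|---|---|---|
| Approach to the MCP | Bridge from an established ecosystem; MCP coexists with Custom GPT Actions | Native, first-party integration (Anthropic created the MCP) | Open-weight models plus an open standard, with developer control |
| Key strengths | Huge user base, familiar developer environment, cross-ecosystem connectivity | Reliability, a refined experience, built-in security | Portability, data control, cost efficiency, community-driven tools |
| Main trade-off | Can feel fragmented alongside proprietary Actions and Plugins | Less flexible for projects that switch providers often | Requires more technical expertise to set up and manage |
| Ideal for | Broad consumer or professional audiences already using ChatGPT | Teams committed to Anthropic that need a managed, secure environment | Strict privacy needs, full control of the AI stack, long-term portability |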

Choosing the Right LLM for Your AI Agent

The "best" option depends on what you want your project to achieve.

- Choose ChatGPT if: Your goal is to reach a wide audience with your AI agent or to integrate smoothly with popular existing tools. By tapping into ChatGPT's large user base and developer-friendly ecosystem, you can build solutions that are accessible and scalable.

- Choose Claude if: You need a highly reliable, secure, and deeply integrated agent experience within a managed cloud environment. If you're comfortable working within Anthropic’s ecosystem and trust its native implementation of the Model Context Protocol (MCP), Claude is the natural choice.

- Choose Mistral if: Your project requires maximum portability, control over data privacy, or cost-efficient on-premise deployment. If you’re a developer or enterprise that values open-weight models and needs the flexibility to switch between LLMs without major rework, Mistral is the better fit.

Conclusion

The Model Context Protocol (MCP) marks a crucial step forward in creating a more unified and effective future for AI agents. It establishes a common framework that allows powerful LLMs to do more than just hold a conversation; they can now truly interact with the digital world.

Looking at the differences between ChatGPT, Claude, and Mistral, it’s clear that while the MCP standardizes how things are done, each provider’s core philosophy still influences how and why it’s implemented. Whether you need seamless integration, broad user reach, or maximum flexibility and control, the MCP offers a solid foundation for each approach. The real breakthrough in AI goes beyond creating smarter models; it’s about developing models that are open, interoperable, and capable of driving new levels of automation and insight across all industries.

To dive deeper into how the Model Context Protocol (MCP) is transforming AI agents across different platforms, check out our articles on how MCP is unlocking Claude’s potential for SEO and marketing automation, how it’s changing ChatGPT beyond plugins, and how it enhances Mistral’s interoperable AI agents.