Large Language Models (LLMs) have become a core technology for businesses. However, there's often a catch: the most widely used models are locked inside proprietary platforms. That's where Mistral AI steps in, challenging this norm. Known for efficient, high-performance models, Mistral offers developers and businesses an alternative that emphasizes transparency, control, and openness.

But even the most powerful LLMs, whether open-weight or proprietary, share one big limitation: on their own, they cannot securely and reliably interact with the outside world. This is exactly where the Model Context Protocol (MCP) becomes crucial for Mistral. Originally developed by Anthropic, the MCP is an open standard that defines how AI applications connect models to external tools and data. It serves as the bridge that turns Mistral's models from reasoning tools into actionable, dynamic AI agents.

For those in marketing, SEO, or content strategy, it's worth understanding how Mistral’s open approach aligns with the MCP’s standardized framework. Together, they make it possible to build AI solutions that are powerful and efficient, yet also adaptable, private, and ready for the future. This article explains why the MCP is such a strong match for Mistral's vision, along with its benefits and real-world applications.

Why Mistral's Philosophy Stands Out: Power, Efficiency, and Openness

Before we dive into the Model Context Protocol, it's important to understand what makes Mistral AI stand out in a market that’s largely dominated by the bigger tech players. Mistral has a refreshing approach to LLMs, especially for developers and businesses who want more control and flexibility.

- Efficiency at Its Core: Mistral’s models are known for delivering strong performance with a small footprint compared with larger, closed-source models. This translates to lower computational costs, faster processing, and the flexibility to deploy AI closer to where the data lives, whether on-premises or even on edge devices. For businesses, this means AI that’s both cost-effective and quick to respond.

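- Open-Weight Models: One of Mistral’s standout features is its commitment to open-weight models. Unlike closed-source LLMs, whose weights stay hidden on the vendor’s servers, models such as Mistral 7B and Mixtral 8x7B were released under the Apache 2.0 license and can be downloaded, examined, and fine-tuned by anyone. Not every Mistral model is open-weight, however: some of its commercial models are available only through Mistral’s API or under more restrictive licenses. Where the weights are open, this transparency builds trust and opens the door to customization, encouraging a community of developers to contribute and innovate.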

- A Developer-Friendly Mindset: Mistral’s tools and documentation are designed with developers in mind, offering flexibility, control, and easy access. This contrasts with more restrictive, platform-dependent ecosystems and makes Mistral a strong choice for businesses that need customization and care about data sovereignty.
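A back-of-the-envelope estimate shows why a small footprint matters for on-premises or edge deployment. Counting only the model weights, memory is roughly the parameter count times the bytes stored per parameter. Taking Mistral 7B, which Mistral describes as a 7.3B-parameter model, as the example:

```latex
\begin{aligned}
\text{weight memory} &\approx N_{\text{params}} \times \text{bytes per parameter} \\
\text{16-bit weights: } &\approx 7.3\times10^{9} \times 2\ \text{bytes} \approx 14.6\ \text{GB} \\
\text{4-bit weights: } &\approx 7.3\times10^{9} \times 0.5\ \text{bytes} \approx 3.65\ \text{GB}
\end{aligned}
```

These figures cover weights only; the KV cache, activations, and runtime overhead add more, and 4-bit quantization usually costs some accuracy in exchange for the smaller footprint.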

Mistral’s focus on efficiency and openness makes it an appealing option for anyone looking for powerful AI without the limitations of proprietary systems. But even open-weight models still face the challenge of interacting with external tools, and that’s where the Model Context Protocol comes into play.

How MCP Unlocks the Full Potential of Mistral's Agent-Based Future

Mistral’s models reason well, and many support function calling: given a list of tool definitions, the model can respond with a structured request to call a specific tool with specific arguments. The model only proposes the action, though; the application still has to execute it. That alone isn’t enough for real interoperability. Without a universal protocol, developers would need to build custom connections between Mistral and each external system, such as a CRM, an email API, or a database, which leads to inconsistency and wasted effort.
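The gap is easiest to see in code. In the sketch below, the model's reply is assumed to look like a simplified function-calling response from Mistral's chat completions API (a `tool_calls` list whose `arguments` field is a JSON string); the `get_customer` and `send_email` tools are hypothetical stand-ins for a CRM and an email API. The model only names a tool and its arguments; the application looks up and runs the matching function itself.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical local tools; a real integration would call a CRM or email API here.
def get_customer(customer_id: str) -> Dict[str, Any]:
    return {"id": customer_id, "name": "Example Corp", "tier": "gold"}

def send_email(to: str, subject: str) -> Dict[str, Any]:
    return {"status": "queued", "to": to, "subject": subject}

# Hand-written registry: every new system needs its own entry and glue code.
LOCAL_TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_customer": get_customer,
    "send_email": send_email,
}

def run_tool_calls(message: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Execute the tool calls the model suggested and build tool-result messages."""
    results = []
    for call in message.get("tool_calls", []):
        name = call["function"]["name"]
        # Arguments arrive as a JSON-encoded string, not as a dict.
        args = json.loads(call["function"]["arguments"])
        output = LOCAL_TOOLS[name](**args)
        results.append({
            "role": "tool",
            "name": name,
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results

# Simplified assistant message shaped like a function-calling response (assumption).
assistant_message = {
    "role": "assistant",
    "content": "",
    "tool_calls": [
        {"id": "call_1",
         "function": {"name": "get_customer", "arguments": '{"customer_id": "C-42"}'}},
    ],
}

for msg in run_tool_calls(assistant_message):
    print(msg["name"], msg["content"])
```

Every new system means another hand-written function and registry entry, maintained separately in each application. That per-integration glue is what a shared protocol like MCP is meant to replace.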

That’s where the Model Context Protocol (MCP) steps in. It fills the gap by providing a standardized framework for describing and calling tools, so applications built on Mistral can connect smoothly with many external systems. This is more than a technical detail; it’s a strategic fit that reinforces Mistral’s distinctive advantages:

- Fostering Openness with Standards: Mistral provides open-weight models, while the MCP provides an openly specified communication layer that connects those models to tools and data. Together, they form a genuinely open ecosystem where developers aren’t tied to proprietary integration methods. This encourages collaboration, because a tool exposed through an MCP server can be used by any MCP-compatible application, whichever LLM it runs.

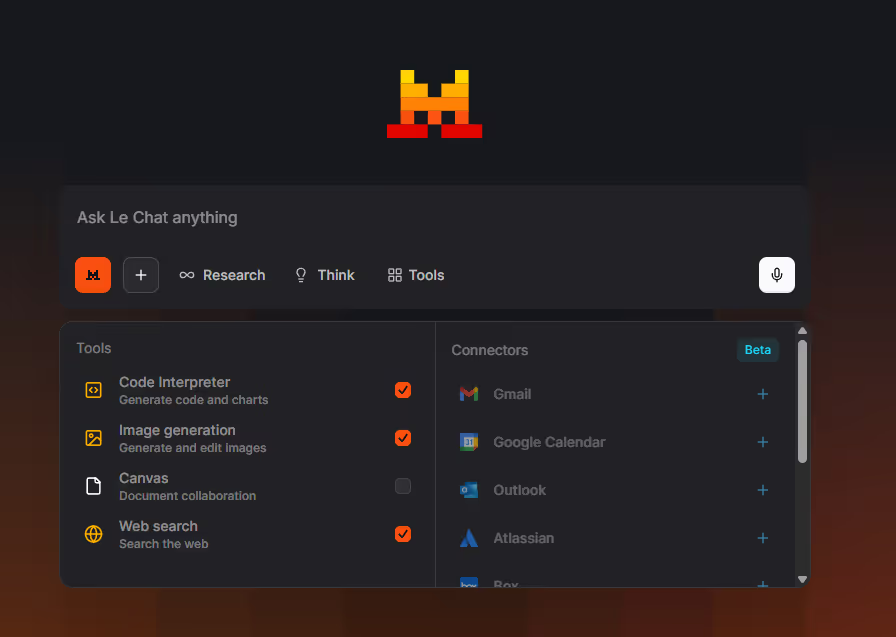

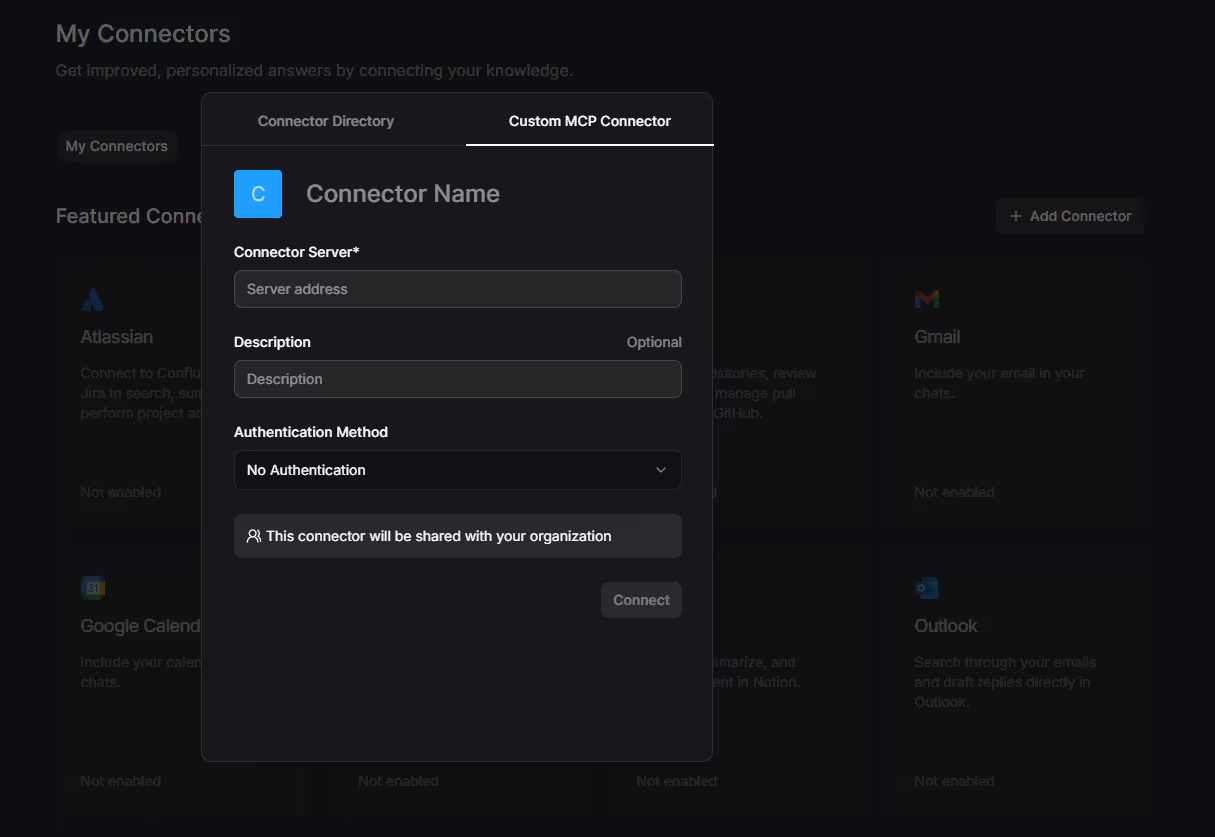

- Simplifying Agent Development: For developers using Mistral’s models, the MCP streamlines the creation of AI agents. Rather than building a custom integration for each API, developers can expose their tools through an MCP server. The MCP client in their application lists those tools and passes them to the Mistral model as function definitions, so the model can choose among them without bespoke glue code. This cuts down on both development time and complexity.

- Ensuring Portability: This is a major benefit. An agent built with Mistral and an MCP server is more portable by design. If a new, more powerful Mistral model comes out, or if a developer wants to switch to a different LLM that supports function calling through an MCP client, the integration logic (the MCP server) stays the same.
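A minimal sketch of the glue an MCP-enabled application (the MCP "host") provides between a Mistral model and an MCP server: it converts the server's `tools/list` result into the function-calling `tools` format that Mistral's chat API accepts, and converts the model's tool call back into an MCP `tools/call` JSON-RPC request. Field names follow the MCP specification (`name`, `description`, `inputSchema`) and Mistral's documented function-calling format; the `get_customer` tool is hypothetical, and the transport, initialization handshake, and error handling are omitted.

```python
import json
from typing import Any, Dict, List

def mcp_tools_to_mistral(list_result: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Convert an MCP `tools/list` result into the function-calling `tools` format."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool["name"],
                "description": tool.get("description", ""),
                # MCP's inputSchema is already JSON Schema, so it maps onto `parameters`.
                "parameters": tool["inputSchema"],
            },
        }
        for tool in list_result["tools"]
    ]

def tool_call_to_mcp_request(call: Dict[str, Any], request_id: int) -> Dict[str, Any]:
    """Turn a model's tool call into an MCP `tools/call` JSON-RPC request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": call["function"]["name"],
            # The model returns arguments as a JSON string; MCP expects an object.
            "arguments": json.loads(call["function"]["arguments"]),
        },
    }

# Example `tools/list` result from a hypothetical CRM MCP server.
mcp_list_result = {
    "tools": [
        {
            "name": "get_customer",
            "description": "Look up a customer record by ID.",
            "inputSchema": {
                "type": "object",
                "properties": {"customer_id": {"type": "string"}},
                "required": ["customer_id"],
            },
        }
    ]
}

mistral_tools = mcp_tools_to_mistral(mcp_list_result)
request = tool_call_to_mcp_request(
    {"id": "call_1",
     "function": {"name": "get_customer", "arguments": '{"customer_id": "C-42"}'}},
    request_id=2,
)
print(json.dumps(request))
```

Switching models only touches this small adapter on the application side (and not even that, if the new model accepts the same tool format); the MCP server and its tools are untouched.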

The combination of Mistral and the MCP is powerful. Mistral offers a highly efficient, transparent LLM, while the MCP provides the open, standardized framework needed to link that intelligence with real-world systems. Together, they offer a strong alternative to proprietary ecosystems, focusing on flexibility and giving developers more control.

Exploring Real-World Use Cases with Mistral and MCP

When you combine Mistral’s capabilities with the Model Context Protocol (MCP), you unlock a range of powerful and unique applications, especially for organizations focused on data control, cost-effectiveness, and flexibility in deployment.

Private, On-Premises AI for Sensitive Data

For industries like finance, healthcare, and legal, where data privacy and compliance are critical, running LLMs on-premises is often a requirement.

Scenario: A financial institution needs to use AI to analyze internal risk reports or respond to customer queries without sending any data to external cloud services.

Workflow:

- The institution installs an open-weight Mistral model (such as Mistral 7B or Mixtral 8x7B) directly on its internal servers.

- An MCP server is set up within the same secure environment, connecting to the company’s proprietary databases, legacy systems, and internal communication tools, all within its firewall.

- Using the MCP, a Mistral-powered agent can process queries, generate compliance reports, summarize sensitive documents, and interact with internal systems—all while keeping the data securely within the company’s network.
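To make this workflow more concrete, here is a minimal sketch of what such an internal MCP server does: it answers JSON-RPC `initialize`, `tools/list`, and `tools/call` requests over standard input/output, one message per line, so no network port is opened. It uses only the Python standard library to show the message flow; a production server would more likely use an official MCP SDK and follow the full specification. The `get_risk_report` tool, the `RISK_REPORTS` data, the server name, and the `--stdio` flag are hypothetical, and the `protocolVersion` string is an assumption that should match the spec revision your client uses.

```python
import json
import sys
from typing import Any, Dict, Optional

# Hypothetical internal data; a real server would query an internal database.
RISK_REPORTS = {"Q1": "Credit exposure within limits; two counterparties on watch list."}

TOOLS = [{
    "name": "get_risk_report",
    "description": "Return the internal risk report summary for a quarter.",
    "inputSchema": {
        "type": "object",
        "properties": {"quarter": {"type": "string"}},
        "required": ["quarter"],
    },
}]

def _error(msg_id: Any, code: int, text: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": text}}

def handle(message: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Answer one JSON-RPC message; notifications (no "id") get no reply."""
    if "id" not in message:
        return None
    method = message.get("method")
    if method == "initialize":
        result = {
            "protocolVersion": "2024-11-05",  # assumption: match your client's revision
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "internal-risk", "version": "0.1"},
        }
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        params = message.get("params", {})
        if params.get("name") != "get_risk_report":
            return _error(message["id"], -32602, "Unknown tool")
        quarter = params.get("arguments", {}).get("quarter", "")
        report = RISK_REPORTS.get(quarter)
        text = report if report is not None else f"No report for {quarter!r}."
        result = {"content": [{"type": "text", "text": text}], "isError": report is None}
    else:
        return _error(message["id"], -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}

def main() -> None:
    # stdio transport: read one JSON-RPC message per line, reply on stdout.
    for line in sys.stdin:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            sys.stdout.write(json.dumps(reply) + "\n")
            sys.stdout.flush()

if __name__ == "__main__" and "--stdio" in sys.argv:
    main()
```

Because the server talks only over stdio to a host process on the same machine, report contents go no further than that host; with a self-hosted Mistral model, the model that reads them also runs inside the firewall.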

Unique Advantage: This setup delivers a capable AI agent whose data never leaves the company’s own infrastructure, which makes it easier to meet strict regulatory requirements that cloud-hosted LLMs may struggle to satisfy.

Flexible and Easily Evolving AI Applications

For developers and startups, the ability to adapt and scale AI solutions without major overhauls gives them a significant edge in the market.

Scenario: A startup develops an AI assistant for project management, helping teams organize tasks, summarize meetings, and track progress.

Workflow:

- The developer sets up an MCP server that connects with project management tools (like Jira or Asana) and communication platforms (like Slack).

- Initially, to keep costs low and responses fast, they use a smaller, efficient Mistral model (such as Mistral 7B) running locally or on a lightweight cloud instance. Through the MCP server, this model can already call the connected tools, so the team can validate the assistant’s features early and cheaply.

- As the application grows or needs more processing power, the developer can switch to a more capable Mistral model, such as Mistral Large, or even to a different LLM that supports function calling, while the MCP server and its tool integrations remain unchanged.
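The model swap can be sketched as a single changed field in the request. This assumes requests go to Mistral's hosted chat completions API; the model identifiers below are examples that should be checked against Mistral's current model list, and the `create_task` tool is hypothetical. The `tools` list, derived once from the MCP server, is shared unchanged by both requests.

```python
from typing import Any, Dict, List

# Tool definitions converted once from the MCP server's `tools/list` (hypothetical tool).
TOOLS: List[Dict[str, Any]] = [
    {
        "type": "function",
        "function": {
            "name": "create_task",
            "description": "Create a task in the project tracker.",
            "parameters": {
                "type": "object",
                "properties": {"title": {"type": "string"}},
                "required": ["title"],
            },
        },
    }
]

def build_chat_request(model: str, user_text: str) -> Dict[str, Any]:
    """Build a chat request body; only `model` varies between deployments."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
        "tools": TOOLS,
        "tool_choice": "auto",  # let the model decide whether to call a tool
    }

# Example model identifiers; verify against Mistral's current model list.
small = build_chat_request("open-mistral-7b", "Add a task: draft Q3 roadmap")
large = build_chat_request("mistral-large-latest", "Add a task: draft Q3 roadmap")

changed = [key for key in small if small[key] != large[key]]
print(changed)  # ['model']
```

Moving to another vendor's model may require adapting the request format on the application side, but the MCP server and its tool integrations stay as they are.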

Unique Advantage: This approach provides a high degree of portability. Because the logic for interacting with external tools lives in the MCP server rather than in the LLM, businesses can choose the best model for their needs at any time without costly re-engineering or re-integration.

How the Mistral and MCP Combination Gives You a Strategic Advantage

The real strength of combining Mistral's open-weight models with the Model Context Protocol isn’t just about technical performance; it’s about the strategic advantage of interoperability. In a time when AI is evolving quickly, having flexibility is essential.

Users of proprietary models, such as the GPT models behind ChatGPT or Anthropic’s Claude, depend on each vendor’s features, pricing, and long-term plans. The Mistral-MCP combination offers a more open approach:

- Freedom of Choice: Organizations no longer have to depend on a single LLM vendor. They can select the model that best fits their performance, cost, or privacy requirements, including from a growing ecosystem of open-weight models, confident that their existing MCP tool integrations will keep working.

- Increased Control: By hosting their own Mistral models and MCP servers, businesses have full control over their data, AI logic, and deployment. This kind of autonomy is crucial for maintaining compliance, security, and strategic independence.

- Community-Driven Innovation: The openness of both Mistral and MCP creates a thriving community. This leads to a rapid exchange of shared tools, best practices, and new ideas, allowing innovation to happen at a pace that proprietary ecosystems often can’t match.

Conclusion

The Model Context Protocol is more than a technical detail; it’s a driving force behind the vision of open, efficient, and powerful AI. For Mistral AI, the MCP is what lets its high-performance, open-weight models go beyond reasoning and take action in the real world by interacting with a wide variety of external systems.

For professionals in marketing, SEO, and content strategy, this pairing offers a strong alternative. It makes it possible to build AI agents that integrate well into existing workflows, handle sensitive data on-premises, and adapt to future technological changes. The real AI revolution won’t come only from building bigger, more complex models; it will come from making it easier and more standardized to connect these models to the world. Mistral and the MCP are helping lead that shift: Mistral with its open-weight models, and the MCP as an open standard for connecting models to the tools and data that will shape the next generation of intelligent applications.

If you're interested in learning more, check out our other articles on how the Model Context Protocol (MCP) is transforming the AI landscape: How MCP is Unlocking Claude’s Potential for SEO and Marketing Automation, Beyond Plugins: How the Model Context Protocol (MCP) Is Changing ChatGPT, and MCP in Claude vs. ChatGPT vs. Mistral: Which LLM is Best for Your AI Agent?.