Keeping your data inside Google Analytics, Meta, or other ad platform dashboards can feel convenient, but it creates real headaches: short retention windows, API rate limits that block full exports, sudden schema changes, and vendor lock-in.

Moving those feeds into PostgreSQL centralizes every source under your control, with scheduled syncs that capture history and support backfills, versioned models so transformations are auditable and reversible, and BI-ready tables designed for analysis. That setup reduces report breakages, speeds up queries and dashboards, and gives you tighter governance and more independence from vendor whims.

Why "owning" your data beats leaving it in vendor clouds

Too often teams treat vendor dashboards as the final word on their data. That’s fine for quick checks, but it makes deep analysis, auditing, and cross-source joins harder over time. Moving those feeds into PostgreSQL gives you a single, consistent place to query and manage your data. Below are the practical benefits you get from doing that:

- Control & continuity: Vendors change UIs, metrics, and retention rules. In PostgreSQL you define the schema and keep the full history, so you are less likely to be surprised when a platform updates or removes a field.

- Full history & auditability: Ad platforms often limit lookback windows. A database stores time-series data indefinitely, which makes year-over-year, seasonality, and lift analyses possible. Add simple audit columns like loaded_at, source_file, and checksum for traceability.

- Combine everything into a true single source of truth: Bring GA4 sessions, ad spend, CRM revenue, call center logs, and offline events into one place. That level of cross-source blending is hard to do reliably inside any single vendor tool.

- Avoid vendor lock-in: If a platform drops a metric or you decide to switch tools, your historical tables stay intact. That lowers migration cost and gives you more options going forward.

- Fewer data silos, faster answers: Analysts and marketers query one warehouse, not a tangle of dashboards and CSVs. Fewer handoffs means less time lost to manual joins and reformatting.

- Performance & flexibility for BI: Indexing, partitioning, and materialized views keep reports responsive. Serve the same curated tables to Looker Studio, Power BI, or Tableau without repeatedly exporting data.

- Compliance & governance — own the keys: You can enforce your own retention rules, tokenize PII, and manage access with roles and schemas. Centralized logging also makes audits simpler.

- Cost predictability: Repeated API pulls and manual exports add up. ELT into PostgreSQL lets you use incremental loads and standardized models that you optimize once.

- Experimentation without vendor limits: Run attribution models, build MMM features, or test cohort logic in SQL or Python on your tables, rather than being constrained by a vendor’s UI or metric definitions.

Side-by-side: vendor dashboards vs your PostgreSQL

Let’s see a compact, practical comparison of the trade-offs teams run into when they keep data in platform UIs versus when they centralize it in PostgreSQL via Dataslayer, so you can quickly spot which pain points matter for your situation.

Step-by-step: load into PostgreSQL with Dataslayer

Now that we’ve weighed both options, let’s put it to work loading your marketing data into PostgreSQL with Dataslayer. Use the checklist below to pipe your ad and analytics sources into your database. It follows a single flow from preparation to verification, so you can go straight through without missing a step.

What you’ll need

- A PostgreSQL database, cloud or self-hosted.

- DB credentials: host, port, database, user, password, and optionally schema.

- Network access configured so Dataslayer can reach your database. If you block inbound traffic, allowlist Dataslayer connector IPs.

- A Dataslayer account and authenticated connector accounts (GA4, Google Ads, Meta Ads, LinkedIn Ads, CRM, etc.).

Steps

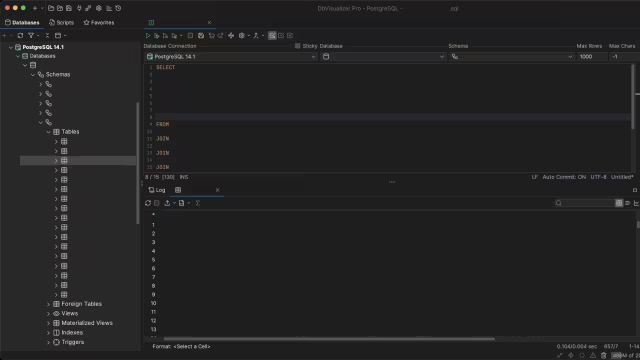

- Authenticate and pick the properties/accounts

Log into Dataslayer and make sure the connectors you need are authenticated and authorized, for example GA4 and Google Ads. Confirm you can see the accounts you want to pull. - Select the database destination

Choose your PostgreSQL destination and start a new transfer. - Configure the origin

Pick the connector, the specific accounts, and the metrics or dimensions you want. Set the date range, filters, and sort order if needed. - Connect the database

Click connect to database and enter the required fields:

- Database type

- Host

- Port

- User

- Password

- Database

- Configure the destination

Fill in the destination settings:

- Transfer Name: the name you will use to identify the transfer in Dataslayer

- Timezone: set the timezone for the export.

- Table Name: the name that will appear in your database, for example ga4_sessions_daily. Tip: keep naming consistent using the pattern source_layer_grain, for example google_ads_campaign_daily or meta_ads_adset_hourly.

- Write mode: choose Append (adds new rows; recommended), Replace (overwrites existing data; use with caution), or Upsert (updates matching rows and inserts new ones).

- Sync frequency: choose Scheduled (runs automatically), Manual (one-off when you trigger it), or Manually split (for large backfills; breaks the job into smaller chunks to avoid timeouts/failures).

- Save

Save the transfer configuration so it can run on schedule or be triggered manually.

1.avif)

- Monitor

Watch run logs, error messages, and row counts from the Dataslayer dashboard. If a job fails, check API rate limits, date ranges, and any schema mismatches. - Verify in PostgreSQL

Confirm rows landed and freshness with simple queries, for example:

-- Rows landed?

SELECT COUNT(*) AS row_count FROM mkt.ga4_sessions_daily;

-- Latest date to confirm freshness

SELECT MAX(event_date) AS latest_loaded FROM mkt.ga4_sessions_daily;Modeling & governance best practices

Centralizing data is step one; turning it into trustworthy insight requires guardrails. These PostgreSQL modeling and governance practices add exactly that: clear structures, repeatable patterns, and simple checks, so your teams can move quickly without sacrificing quality.

- One table per grain and source: Keep ingestion tables narrow and predictable, for example, meta_ads_adset_daily. One table equals one grain, and one source makes joins and audits simpler.

- Incremental keys and upserts: Pick a clear, unique key to deduplicate, for example, date plus campaign_id. If you need a staging step, use it to validate rows before applying upserts to the final table.

- Indexes where it matters: Index the columns your queries actually use. Example:

CREATE INDEX idx_ga4_sessions_daily_date ON mkt.ga4_sessions_daily (event_date);- Partition large tables by month: Monthly partitions make maintenance easier and let you apply retention rules without heavy locks or long vacuum runs.

- Curated marts for BI: Build read-only, business-friendly views such as mart_campaign_performance_daily. Give analysts simple, stable tables to consume instead of raw ingestion schemas.

- Data dictionary: Keep a short catalog that covers table purpose, refresh cadence, and definitions for key metrics like spend and conversions. A few clear rows beat a huge, undocumented wiki.

- Access control: Give analysts SELECT on the marts and restrict write permissions to ingestion schemas only. That keeps accidental changes out of production data.

Common queries marketers love

Centralization makes analysis simple; you can combine web analytics, ad spend, and CRM data in a few lines of SQL. Below are common examples marketers run, beginning with GA4 sessions joined to ad spend for cost per session.

Blend GA4 + Ads to get cost per session

SELECT

d.date,

c.campaign_name,

SUM(c.spend) AS spend,

SUM(g.sessions) AS sessions,

CASE WHEN SUM(g.sessions) > 0

THEN SUM(c.spend)::numeric / SUM(g.sessions)

ELSE NULL

END AS cost_per_session

FROM mkt.google_ads_campaign_daily c

LEFT JOIN mkt.ga4_sessions_daily g

ON g.date = c.date

AND g.campaign_name = c.campaign_name

GROUP BY 1,2

ORDER BY 1 DESC, 2;Top campaigns last 30 days

SELECT

date_trunc('day', date) AS day,

campaign_name,

SUM(spend) AS spend,

SUM(clicks) AS clicks,

SUM(conversions) AS conversions

FROM mkt.google_ads_campaign_daily

WHERE date >= current_date - INTERVAL '30 day'

GROUP BY 1,2

ORDER BY day DESC, spend DESC;Troubleshooting checklist

Hiccups happen: timeouts, permissions, schema drift. Before diving into logs, scan this short checklist. It highlights the usual culprits so you can fix issues quickly and get back to analysis.

- Connection test fails: Re-check host, port, database name, user, and password. Verify SSL settings and confirm your firewall allowlist includes Dataslayer connector IPs.

- Permission errors: Make sure the role used for the load can CREATE, INSERT, UPDATE, and SELECT in the target schema. If needed, grant the specific privileges rather than using broad superuser access.

- Schema mismatches: If the table already exists, verify column names and types match the incoming mapping. Either adjust the mapping, alter the table, or recreate it with the correct schema.

- Slow syncs: Trim unneeded fields, add indexes on frequently queried columns, and consider partitioning high-volume tables by month. For very large backfills, split the export into smaller time windows.

- Unexpected nulls: Confirm the source actually provides those metrics or dimensions for the selected date range and accounts. Start with a minimal field set to isolate the problem before adding more columns.

Security & privacy notes

Before you load data into the database, take a moment to decide what you actually need to store and how you’ll protect it. This isn’t just a technical checkbox; choices about PII, masking or hashing, access controls, and backups affect both compliance and daily ops. Use the notes below as a practical checklist and share them with your ops or privacy team for review.

- You control what lands in the database. Only ingest the fields you actually need and avoid storing PII where possible.

- Mask or hash sensitive IDs before exposing them to broad analyst groups.

- Apply row-level or schema-level access to separate agency access from internal teams.

- Backups and disaster recovery are your responsibility. Schedule them to match how critical the data is to the business.

Not legal advice: make sure your configuration matches your privacy policy and applicable rules like GDPR. Have your ops or privacy team review retention settings and access controls.

FAQs

Why not just use GA4/Meta dashboards?

It's great for quick checks, but they limit history, can change at any time, and don’t blend cross-channel data. PostgreSQL centralizes everything with your rules.

Is this overkill for a small team?

No, PostgreSQL is lightweight, free, and scales. Start with daily syncs and a couple of core tables; expand as your reporting matures.

Can I keep near-real-time data?

Yes. Increase Dataslayer sync frequency (e.g., hourly). For true streaming needs, model a light incremental pattern and materialize aggregates.

What permissions should I grant?

A dedicated role with CREATE/INSERT/UPDATE/SELECT on the ingestion schema; BI users get SELECT on curated marts.

Will this work with my BI tool?

Yes. Tableau, Power BI, Looker Studio, Mode, notebooks, anything that talks to PostgreSQL.

.avif)