AI search platforms like ChatGPT and Perplexity are now driving real website traffic, not hypothetical future growth. Between January and May 2025, AI-referred sessions jumped 527% across tracked websites, with some SaaS companies seeing over 1% of total traffic from LLMs. Even better, visitors from LLMs convert 4.4 times better than traditional organic search visitors, despite arriving in smaller volumes. But most marketing teams can't answer basic questions like "Are we being cited?" or "Which content appears in AI responses?" This guide shows you exactly how to measure your visibility on ChatGPT and Perplexity using both free analytics setups and specialized tracking tools.

How Different Measurement Approaches Compare

Why Traditional Analytics Miss the Full Picture

When someone searches "best project management tools for startups" on Google, you can track that keyword in Search Console. When they ask the same question on ChatGPT, you're flying blind.

AI platforms don't send query data the way search engines do. They open links in new tabs, strip tracking parameters, or use APIs that make attribution nearly impossible. Even worse, AI tools can mention your brand, describe your product features, and influence purchase decisions without ever sending a single visitor to your site.

This creates two blind spots. First, you can't see citations without clicks. ChatGPT might recommend your SaaS product to 50 people asking about accounting software, but if only 3 click through, traditional analytics only shows those 3 visits.

Second, you don't know what prompts trigger mentions. Unlike Google Search Console showing you which keywords drive traffic, AI platforms don't reveal what questions led to their responses.

The solution isn't choosing between traditional SEO and AI visibility: 77% of AI optimization still comes from strong traditional SEO fundamentals, and pages ranking in Google's top 10 are significantly more likely to be cited by AI models. But you need new measurement approaches to see the complete picture.

Setting Up GA4 to Track ChatGPT and Perplexity Traffic

Start by seeing what traffic you're already getting from AI platforms. This free method takes 20 minutes and works for any website with Google Analytics 4.

Creating a Custom Channel Group

Custom channel groups let you track AI traffic as its own category instead of lumping it under generic "Referral" traffic. Here's the exact setup:

- Go to Admin (bottom left) > Channel groups under "Data Display"

- Click "Create new channel group" and name it "AI Traffic Channels"

- Click "Add new channel" and name it "AI"

- Under conditions, select "Source" then "matches regex"

- Paste this regex pattern:

^.*(chatgpt\.com|openai\.com|perplexity\.ai|perplexity|claude\.ai|copilot\.microsoft\.com|gemini\.google\.com|mistral\.ai|deepseek\.com|you\.com).*

- Click "Reorder" and drag your AI channel above "Referral". Order matters because GA4 assigns traffic to the first matching rule.

This setup captures traffic from major AI platforms and applies retroactively to your historical data. Update the regex every few months as new AI platforms launch.
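Before saving the channel, it can help to check the pattern against a few source values you already see in GA4. The sketch below is a minimal local test, assuming GA4's "matches regex" condition behaves like a full match (hence `re.fullmatch`); the sample sources are hypothetical.

```python
import re

# Same pattern as the GA4 channel condition. Treating GA4's "matches regex"
# as a full match is an assumption, so re.fullmatch is used here.
AI_SOURCE_PATTERN = re.compile(
    r"^.*(chatgpt\.com|openai\.com|perplexity\.ai|perplexity|claude\.ai|"
    r"copilot\.microsoft\.com|gemini\.google\.com|mistral\.ai|deepseek\.com|you\.com).*"
)


def is_ai_source(source: str) -> bool:
    """Return True if a session source would land in the AI channel."""
    return AI_SOURCE_PATTERN.fullmatch(source) is not None


# Hypothetical session sources, for illustration only.
samples = ["chatgpt.com", "perplexity", "google", "l.facebook.com",
           "claude.ai", "copilot.microsoft.com", "youtube.com"]
for s in samples:
    print(f"{s:25} -> {'AI' if is_ai_source(s) else 'other'}")
```

Anything that prints "other" but you know is an AI assistant is a sign the pattern needs a new alternative.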

One important limitation: Free ChatGPT users don't always send referrer data, so some AI clicks show up as "Direct" traffic.

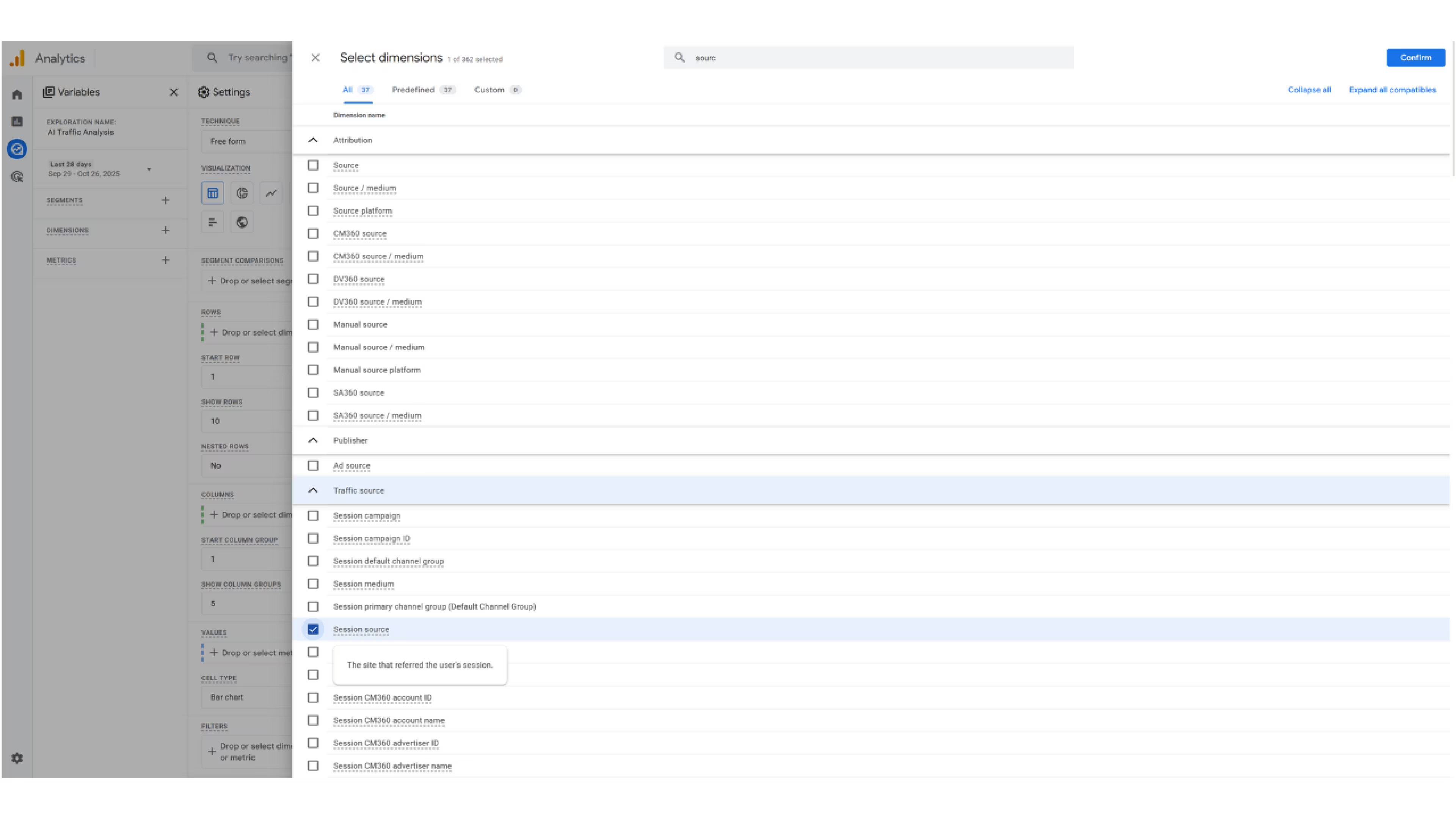

Building an Exploration Report for Deeper Analysis

Navigate to Explore > Create blank exploration. Name it "AI Traffic Analysis." Add these dimensions:

- Session source / medium

- Landing page + query string

- Device category

Add these metrics:

- Sessions

- Engagement rate

- Average session duration

- Conversions or key events

Under Filters, set Session source to "matches regex" and use the same pattern from above. Set your date range to "Last 90 days" at minimum: AI traffic grows over time, so short date ranges hide trends.

Change visualization to a line chart with weekly granularity to spot growth patterns. You should see an upward trend if your content is gaining AI visibility.
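If you export the exploration to CSV and want to reproduce the weekly view outside GA4, summing sessions by ISO week is enough. This is a sketch assuming a hypothetical export of (date, session source, sessions) rows; the actual column layout of your export may differ.

```python
from collections import defaultdict
from datetime import date

# Hypothetical rows from the "AI Traffic Analysis" exploration export:
# (date, session source, sessions). The layout is an assumption.
rows = [
    (date(2025, 5, 5), "chatgpt.com", 12),
    (date(2025, 5, 7), "perplexity.ai", 4),
    (date(2025, 5, 13), "chatgpt.com", 15),
    (date(2025, 5, 14), "claude.ai", 3),
    (date(2025, 5, 20), "chatgpt.com", 21),
]


def weekly_sessions(rows):
    """Sum sessions per ISO (year, week), mirroring weekly granularity."""
    totals = defaultdict(int)
    for day, _source, sessions in rows:
        year, week, _weekday = day.isocalendar()
        totals[(year, week)] += sessions
    return dict(sorted(totals.items()))


weeks = weekly_sessions(rows)
for (year, week), sessions in weeks.items():
    print(f"{year}-W{week:02d}: {sessions} sessions")
```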

Tools That Track AI Citations (Not Just Clicks)

GA4 shows who clicked through, but specialized tools reveal the full story: when AI platforms mention you, what they say, and how often you appear versus competitors.

Entry-Level: Free Manual Testing

Before spending on tools, test manually. Open ChatGPT and enable web search. Ask questions your target audience would actually type:

- "What are the best email marketing tools for small businesses?"

- "How to choose accounting software for freelancers?"

- "Top CRM platforms for real estate agents?"

Take notes on which brands appear and what context surrounds each mention. Run the same prompts in Perplexity and Gemini; each platform has different citation patterns. Research shows Reddit accounts for 46.7% of Perplexity citations but only 11.3% of ChatGPT citations.

This manual approach works for initial assessment but doesn't scale.
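Even manual notes are easier to compare when they are structured. A minimal sketch, assuming you log each test as (platform, prompt, brands mentioned); the brand names below are hypothetical placeholders.

```python
from collections import Counter

# Hypothetical manual test log: (platform, prompt, brands mentioned).
observations = [
    ("ChatGPT", "best email marketing tools for small businesses", ["BrandA", "BrandB", "YourBrand"]),
    ("Perplexity", "best email marketing tools for small businesses", ["BrandA", "BrandC"]),
    ("ChatGPT", "how to choose accounting software for freelancers", ["BrandD", "YourBrand"]),
    ("Perplexity", "how to choose accounting software for freelancers", ["BrandD", "BrandE"]),
]


def mentions_by_platform(observations):
    """Count, per platform, how many tested prompts mention each brand."""
    counts = {}
    for platform, _prompt, brands in observations:
        # set() so a brand named twice in one answer counts once per prompt
        counts.setdefault(platform, Counter()).update(set(brands))
    return counts


counts = mentions_by_platform(observations)
for platform, brand_counts in counts.items():
    print(platform, brand_counts.most_common(3))
```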

Mid-Tier: Prompt Tracking Platforms ($99-$300/month)

These platforms automate the manual testing process:

- Peec AI tracks your brand mentions across ChatGPT, Perplexity, Gemini, Claude, and DeepSeek. It runs your custom prompts daily and measures share of voice, sentiment, and which sources AI platforms cite when mentioning you.

- Otterly.AI focuses on both brand mentions and link citations across Google AI Overviews, ChatGPT, and Perplexity. Unlike pure LLM monitoring tools that only track model outputs, Otterly monitors AI search platforms that incorporate web results.

- Semrush AI SEO Toolkit includes prompt tracking alongside traditional SEO metrics. It monitors ChatGPT, Google AI Overviews, Google AI Mode, and Perplexity, showing share of voice versus competitors.

These tools answer questions GA4 can't: "Do we appear for 'project management software for agencies' prompts?" and "When we're mentioned, what do AI platforms actually say about us?"

Enterprise-Level Options ($500+/month)

- Rank Prompt offers the most complete LLM tracking, monitoring your brand, competitors, and specific URLs across all major AI interfaces. It provides share-of-voice dashboards and tracks how you're positioned when you do appear.

- Profound targets Fortune 1000 companies with features like multi-language tracking, compliance certifications (SOC 2 Type II), and misinformation detection.

- Keyword.com AI Tracker combines traditional rank tracking with AI visibility in one dashboard, letting you correlate SERP positions with ChatGPT or Perplexity citations.

The right tool depends on your situation. If you're just starting, use GA4 custom channels plus manual testing for 30 days. When AI traffic reaches 0.2% of total sessions or you need competitive intelligence, upgrade to dedicated tracking.
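The upgrade rule of thumb above is simple arithmetic. Here it is as a small helper; the function name and the `needs_competitive_intel` flag are illustrative, not part of any tool.

```python
def should_upgrade(ai_sessions: int, total_sessions: int,
                   needs_competitive_intel: bool = False,
                   threshold: float = 0.002) -> bool:
    """Upgrade when AI traffic reaches 0.2% of sessions or competitive data is needed."""
    if total_sessions <= 0:
        raise ValueError("total_sessions must be positive")
    ai_share = ai_sessions / total_sessions
    return ai_share >= threshold or needs_competitive_intel


# Hypothetical month: 150 AI sessions out of 60,000 total is 0.25%.
print(should_upgrade(150, 60_000))  # True
print(should_upgrade(90, 60_000))   # False (0.15%)
```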

Creating Your Measurement Dashboard

Once you've set up tracking in multiple places (GA4, an LLM tool, maybe server logs), you need one place to see the complete picture.

For regular reporting, connect your data sources to a dashboard platform. If you're pulling AI traffic data from GA4 plus traditional SEO metrics from Search Console plus paid campaign data, tools like Dataslayer let you automate this into Google Sheets, Looker Studio, BigQuery, or Power BI. You can schedule automatic updates and build client-ready reports that combine AI visibility alongside other marketing channels.

The key metrics to track consistently:

Volume metrics:

- Total AI referral sessions (from GA4)

- Sessions by platform (ChatGPT vs Perplexity vs others)

- Growth rate month-over-month

Quality metrics:

- Conversion rate from AI traffic vs organic search

- Average engagement time

- Bounce rate

Visibility metrics:

- Number of tracked prompts where you appear

- Share of voice vs top 3 competitors

- Citation frequency (from LLM tools)

Content metrics:

- Which landing pages receive AI traffic

- Which topics generate citations

- Gap analysis: important prompts where you don't appear
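Two of the volume and quality metrics above reduce to a couple of ratios. This sketch uses hypothetical monthly figures; in practice the inputs would come from GA4 or a dashboard export.

```python
def mom_growth(current: int, previous: int) -> float:
    """Month-over-month growth as a fraction (0.2 means +20%)."""
    if previous == 0:
        raise ValueError("previous month has zero sessions; growth is undefined")
    return (current - previous) / previous


def conversion_rate(conversions: int, sessions: int) -> float:
    """Conversions (or key events) per session; 0.0 when there were no sessions."""
    return conversions / sessions if sessions else 0.0


# Hypothetical monthly figures, for illustration only.
ai_now, ai_prev = 480, 400
ai_conv = conversion_rate(24, ai_now)        # 5.0%
organic_conv = conversion_rate(300, 15_000)  # 2.0%

print(f"AI MoM growth: {mom_growth(ai_now, ai_prev):.0%}")               # 20%
print(f"AI vs organic conversion ratio: {ai_conv / organic_conv:.1f}x")  # 2.5x
```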

One B2B SaaS company tracking these metrics discovered their comparison pages drove 3x more AI traffic than product feature pages. They shifted content investment based on this insight.

What the Data Actually Tells You

Here's what surprises most marketing teams: nearly 90% of the time, ChatGPT cites pages ranking in position 21 or lower in traditional search. You don't need top-3 Google rankings to dominate AI responses.

This flips traditional SEO strategy. A detailed help doc ranking #34 might never drive Google traffic but could appear in ChatGPT responses 50 times per month.

But AI traffic behaves differently from Google traffic, and the conversion advantage is real. Multiple studies confirm AI visitors convert better, sometimes dramatically so. One analysis found that while AI traffic represented just 0.5% of Ahrefs' total visitors, it drove 12.1% of signups.

Session metrics look worse but mean something different. AI visitors typically browse fewer pages and spend less time on sites. This isn't bad: they've already done their research through the AI conversation. By the time they click through, they're evaluating pricing pages or signing up, not reading educational content.

Industry variation matters enormously. Legal, finance, healthcare, and insurance account for 55% of all LLM-driven sessions in recent studies. People use AI for complex, consultative questions.

Platform differences are bigger than expected. ChatGPT dominates volume (40-60% of all AI referral traffic), but Claude users show the highest session value at $4.56 per visit, followed by Perplexity at $3.12.
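To see how these platform differences look in your own data, you can compute each source's share of AI sessions and its revenue per session. A sketch, assuming you can export sessions and attributed revenue per session source; all figures below are hypothetical.

```python
# Hypothetical monthly figures per AI source.
sessions_by_source = {"chatgpt.com": 1000, "perplexity.ai": 250, "claude.ai": 100}
revenue_by_source = {"chatgpt.com": 1500.0, "perplexity.ai": 500.0, "claude.ai": 300.0}


def platform_breakdown(sessions, revenue):
    """Return {source: (share of AI sessions, revenue per session)}."""
    total = sum(sessions.values())
    breakdown = {}
    for source, n in sessions.items():
        if n == 0:
            continue  # avoid dividing by zero for sources with no sessions
        breakdown[source] = (n / total, revenue.get(source, 0.0) / n)
    return breakdown


breakdown = platform_breakdown(sessions_by_source, revenue_by_source)
for source, (share, value) in breakdown.items():
    print(f"{source:15} {share:6.1%} of AI sessions  ${value:.2f} per session")
```

A platform with a small share of sessions can still be the most valuable per visit, which is the pattern described above.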

Common Tracking Problems and Fixes

Problem: GA4 shows almost no AI traffic

Likely cause: Free ChatGPT users don't consistently send referrer data. Their clicks often appear under "Direct" traffic instead.

Fix: Add UTM parameters when sharing links in your own ChatGPT usage or social posts. Use something like ?utm_source=chatgpt&utm_medium=ai-referral.
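When adding UTM parameters by hand, it's easy to break a URL that already has a query string or a `#fragment`. A minimal sketch using Python's standard `urllib.parse`; the `add_utm` helper is hypothetical and uses the parameter values suggested above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str = "chatgpt", medium: str = "ai-referral") -> str:
    """Add utm_source/utm_medium while keeping existing params and the fragment."""
    parts = urlsplit(url)
    # dict() keeps only the last value for repeated keys; fine for typical landing pages.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update({"utm_source": source, "utm_medium": medium})
    return urlunsplit(parts._replace(query=urlencode(query)))


print(add_utm("https://example.com/pricing?plan=pro#faq"))
# https://example.com/pricing?plan=pro&utm_source=chatgpt&utm_medium=ai-referral#faq
```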

Problem: LLM tracking tool shows mentions, but GA4 shows zero traffic

This is actually normal. AI platforms mention brands without linking, or users read the information and never click. This is exactly why you need both measurement approaches: citations influence purchase decisions even without immediate clicks.

One SaaS company found ChatGPT mentioned their brand 400 times per month, but only 15-20 people clicked through. However, their sales team reported prospects frequently said "ChatGPT recommended you" during discovery calls.

Problem: Different tracking tools show wildly different numbers

LLM outputs are non-deterministic: the same prompt can generate different responses. Tools handle this by running prompts multiple times and calculating averages, but methodologies vary.

Focus on trends within a single tool rather than comparing absolute numbers across platforms. If your share of voice increases from 15% to 28% over three months in Peec AI, that's meaningful even if Profound shows different baseline numbers.
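To see why repeated runs matter, here is a sketch of one common way to summarize them: the fraction of runs that mention each brand, plus a share of voice defined as your mentions over all brand mentions. Tools may define share of voice differently; this definition and the run data are assumptions for illustration.

```python
from collections import Counter

# Hypothetical: one prompt run 5 times; each set holds the brands in one response.
runs = [
    {"YourBrand", "BrandA", "BrandB"},
    {"BrandA", "BrandB"},
    {"YourBrand", "BrandA"},
    {"BrandA", "BrandC"},
    {"YourBrand", "BrandB"},
]


def mention_rates(runs):
    """Fraction of runs in which each brand appears."""
    counts = Counter(brand for run in runs for brand in run)
    return {brand: n / len(runs) for brand, n in counts.items()}


def share_of_voice(runs, brand):
    """The brand's mentions as a share of all brand mentions across runs."""
    total = sum(len(run) for run in runs)
    return sum(brand in run for run in runs) / total if total else 0.0


print(mention_rates(runs))
print(f"YourBrand share of voice: {share_of_voice(runs, 'YourBrand'):.0%}")
```

Because a single run can swing either way, comparing these averages over time within one method is more reliable than any one response.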

Six Mistakes That Invalidate Your Visibility Measurement

Mistake #1: Only tracking your own brand name

Branded searches like "how to use [your product]" will naturally show results about you. The real opportunity is non-branded prompts where buyers don't know about you yet. Make at least 70% of your tracked prompts non-branded.
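A quick way to check your prompt list against the 70% guideline is to flag any prompt containing your brand name. This sketch assumes a simple lowercase substring match; `BRAND_TERMS` and the prompts are hypothetical.

```python
# Hypothetical brand name and variants, lowercase.
BRAND_TERMS = ("yourbrand",)


def is_branded(prompt: str) -> bool:
    p = prompt.lower()
    return any(term in p for term in BRAND_TERMS)


def non_branded_share(prompts):
    """Fraction of tracked prompts that don't mention the brand."""
    if not prompts:
        return 0.0
    return sum(not is_branded(p) for p in prompts) / len(prompts)


prompts = [
    "How to use YourBrand for email automation",
    "best email marketing tools for small businesses",
    "email marketing software with Shopify integration",
    "YourBrand vs BrandA pricing",
    "affordable newsletter platform for nonprofits",
]
share = non_branded_share(prompts)
print(f"Non-branded: {share:.0%} ({'OK' if share >= 0.7 else 'add more non-branded prompts'})")
```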

Mistake #2: Assuming high visibility equals success

A cybersecurity company appeared in 85% of the prompts it tracked, but the conversion rate from AI traffic stayed near zero because AI platforms positioned it as expensive. Measure visibility alongside outcomes, not as an isolated vanity metric.

Mistake #3: Ignoring which sources AI platforms cite

Research shows ChatGPT cites Wikipedia for 48% of references, while Perplexity cites Reddit for 46.7%. If your target prompts consistently cite sources you're not present on, you've found your visibility gap.
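If your tracking tool lets you export the URLs AI platforms cite for your target prompts, counting them by domain shows which sources dominate. A sketch assuming a plain list of cited URLs; the URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def cited_domains(urls):
    """Count cited domains, ignoring a leading 'www.'."""
    counts = Counter()
    for url in urls:
        host = (urlsplit(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host:
            counts[host] += 1
    return counts


# Hypothetical citation URLs exported from an LLM tracking tool.
citations = [
    "https://www.reddit.com/r/smallbusiness/comments/abc",
    "https://en.wikipedia.org/wiki/Customer_relationship_management",
    "https://www.reddit.com/r/sales/comments/def",
    "https://blog.competitor.example/best-crm",
]
counts = cited_domains(citations)
print(counts.most_common(3))
```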

Mistake #4: Using regex patterns that miss emerging platforms

The AI landscape changes constantly. Review and update your GA4 regex patterns quarterly. Check your referral traffic report for unknown domains that look AI-related.
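Part of that quarterly review can be scripted: list referral sources the current pattern misses but whose domain labels look AI-related. This is a heuristic sketch; `AI_HINTS` is a hypothetical keyword set, and anything it flags still needs a human check before you add it to the regex.

```python
import re

# Current GA4 channel pattern (full match assumed, as in GA4's "matches regex").
AI_SOURCE_PATTERN = re.compile(
    r"^.*(chatgpt\.com|openai\.com|perplexity\.ai|perplexity|claude\.ai|"
    r"copilot\.microsoft\.com|gemini\.google\.com|mistral\.ai|deepseek\.com|you\.com).*"
)

# Hypothetical hint tokens; tune them to your own referral report.
AI_HINTS = {"ai", "gpt", "chatgpt", "llm", "chat", "copilot", "assistant"}


def review_candidates(sources):
    """Sources the regex misses whose domain labels contain an AI-like token."""
    candidates = []
    for source in sources:
        if AI_SOURCE_PATTERN.fullmatch(source):
            continue  # already captured by the AI channel
        tokens = set(re.split(r"[.\-]", source.lower()))
        if tokens & AI_HINTS:
            candidates.append(source)
    return candidates


referrals = ["chatgpt.com", "meta.ai", "mail.google.com",
             "chat.newassistant.example", "news.ycombinator.com", "copilot.microsoft.com"]
print(review_candidates(referrals))
```

Matching whole domain labels rather than substrings avoids flagging domains like `mail.google.com` just because they contain the letters "ai".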

Mistake #5: Treating all AI platforms identically

ChatGPT, Perplexity, and Google AI Mode have different citation preferences, user demographics, and use cases. Content that wins in ChatGPT might perform poorly in Perplexity.

Mistake #6: Waiting for "enough" traffic before measuring

"We only get 20 visits per month from AI, so tracking isn't worth it yet." Wrong approach. Set up measurement infrastructure now. Even if current numbers are small, you'll have trend data showing growth trajectory.

What to Do With Your Visibility Data

Measurement without action wastes resources. Here's what actually moves the needle:

- Create content for citation-worthy topics. Your LLM tool shows which prompts mention competitors but never mention you. These are content gaps with clear ROI. Don't guess what to write; build content around the questions people are already asking AI platforms.

One B2B company found they never appeared for prompts about "integrations with Salesforce" despite having strong Salesforce connections. They published a detailed integration guide, and within 30 days they started appearing in those AI responses.

- Optimize existing high-performers. GA4 shows which landing pages already receive AI traffic. Add clearer structure with H2/H3 headers, bullet lists summarizing key points, and definitive statements AI platforms can quote.

- Address negative context. Sometimes you appear in AI responses but not favorably. If price concerns dominate mentions, publish transparent pricing guides or ROI calculators. AI platforms will cite this new context.

- Build attribution into your sales process. Add "How did you first hear about us?" to demo request forms with an open text field. Sales teams should ask "Have you researched solutions using AI tools?" during discovery calls. This captures influence that never appears in web analytics.
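The first step in this list, finding prompts where competitors appear but you don't, is a simple filter over your tracking results. A sketch assuming you can export a mapping of prompt to brands mentioned; all data here is hypothetical.

```python
# Hypothetical tracking export: prompt -> brands mentioned in the AI response.
results = {
    "integrations with Salesforce for email tools": {"BrandA", "BrandB"},
    "best email marketing tools for small businesses": {"BrandA", "YourBrand"},
    "email tool with the best deliverability": {"BrandB"},
    "cheap newsletter software": {"YourBrand"},
}
COMPETITORS = {"BrandA", "BrandB"}


def content_gaps(results, brand, competitors):
    """Prompts where at least one competitor appears but the brand does not."""
    return sorted(
        prompt for prompt, brands in results.items()
        if brand not in brands and brands & competitors
    )


for prompt in content_gaps(results, "YourBrand", COMPETITORS):
    print("Gap:", prompt)
```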

Frequently Asked Questions

How long does it take to see results from LLM optimization efforts?

Most sites see initial improvements within 14-30 days, with significant traffic increases after 60 days of consistent work. Within two weeks, you'll likely see your brand appearing in more prompt variations. Within 60 days, expect measurable increases in AI referral traffic in GA4 and shifts in share of voice versus competitors. Within 90+ days, look for conversion rate improvements from AI traffic and clear correlation between specific content updates and AI visibility gains. The timeline varies by industry; high-consultative sectors like legal and finance see faster traction.

Do I need expensive tools or can I just use Google Analytics?

Start with GA4 custom channel groups; this costs nothing and shows whether AI traffic matters for your business. If you're getting fewer than 50 AI sessions per month, free tracking is probably sufficient. Upgrade to paid LLM tracking tools when your AI traffic exceeds 0.2% of total sessions, you need competitive intelligence on share of voice, you're creating content specifically to improve AI visibility, or you need to report AI metrics to stakeholders regularly. Budget roughly $100-300/month for entry-level LLM tracking tools that monitor 50-200 prompts across multiple platforms.

Should I optimize for specific AI platforms or try to rank everywhere?

Prioritize platforms where your actual audience spends time. Check your GA4 data first: if 80% of your AI traffic comes from ChatGPT and Perplexity, focus there. That said, the fundamentals that improve visibility in one platform generally help across all platforms: clear content structure, authoritative sourcing, specific examples rather than vague statements, and comprehensive coverage of topics. If you must choose just one platform to optimize for initially, choose ChatGPT: it's 6x larger than Perplexity and drives 40-60% of all LLM traffic in most industries.

What metrics actually matter for measuring AI visibility success?

Avoid vanity metrics like "total mentions" or "visibility score" without context. Focus on these instead: share of voice in your category (what percentage of relevant prompts mention you versus top 3 competitors), qualified traffic growth (AI sessions increasing with engagement signals), conversion rate differential (are AI visitors converting better, worse, or similar to organic search), content efficiency (which pages drive the most AI traffic relative to their traditional organic traffic), and pipeline influence (for B2B, track how many deals involve buyers who mention AI research in sales conversations).

How do I convince stakeholders to invest in LLM visibility tracking?

Start with proof of existing impact using free methods. Set up GA4 custom channel groups and run a 30-day baseline. Frame the business case around risk and opportunity. Use these data points: AI traffic grew 527% in five months (Jan-May 2025), LLM visitors convert 4.4x better than organic search, ChatGPT now has 800 million weekly users and processes 2.5 billion prompts daily, and some SaaS companies already see over 1% of total traffic from LLMs. Propose a 90-day pilot: Set up free tracking, run manual tests monthly, document findings, and then request budget for paid tools based on what you learn.

Can AI platforms penalize me for optimization attempts?

No. AI platforms don't "penalize" in the way Google might for manipulative SEO tactics. They generally reward the same things users value: clear, accurate, well-sourced information that directly answers questions. That said, obvious attempts to game systems backfire. Keyword-stuffed content or made-for-AI pages with no human value will simply get ignored by AI platforms. The best approach: create genuinely useful content that humans want, then structure it so AI platforms can easily understand and cite it.

What happens to my AI visibility when platforms change their models?

Model updates do affect citation patterns. However, 77% of AI optimization comes from strong traditional SEO, which remains relatively stable across model updates. If your content is genuinely authoritative, well-structured, and answers important questions, temporary model changes cause small fluctuations, not catastrophic drops. Monitor visibility consistently so you can spot changes quickly. The companies that struggle most with model updates are those who relied on tricks or shortcuts.

Next Steps: Start Measuring Today

AI visibility measurement isn't optional anymore; it's a standard part of marketing analytics. Here's your implementation roadmap:

- Week 1: Set up GA4 custom channel groups for AI traffic. Takes 20 minutes, costs nothing, gives you a baseline.

- Week 2-4: Manually test 10-15 prompts your customers would actually ask. Document which competitors appear and what context surrounds mentions.

- Month 2: Review 30 days of GA4 data. If AI traffic exceeds 0.2% of total sessions or shows strong conversion rates, that's your signal to invest further.

- Month 3: If early indicators are positive, trial an LLM tracking tool like Peec AI, Otterly.AI, or Semrush AI SEO Toolkit for 30 days.

- Ongoing: Update your regex patterns quarterly, test new prompts monthly, and review landing page performance from AI traffic every 60 days.

The companies winning at AI visibility started tracking six months ago when the numbers were tiny. They now have trend data, know what content works, and understand their share of voice in their category.

Want to automate your AI traffic reporting alongside your other marketing data? Try Dataslayer free for 15 days to connect GA4, Search Console, and other data sources to Google Sheets, Looker Studio, BigQuery, or Power BI, saving hundreds of hours on manual reporting.